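1. Overview

- Before upgrading Kubernetes, we reviewed compatibility for the software most affected by the upgrade.

Dashboard, metrics-server, Kubeflow, Istio, Knative

- Considering Kubeflow compatibility alone, Kubernetes 1.19 would be the right choice, but we went with 1.20 to follow the upgrade strategy of our in-house container platform (Flyingcube). (See 6.c.)

- At the time of the upgrade (2021-10-20), the latest Kubernetes release was 1.22.2.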

2. Environments

- Kubernetes 1.16.15

- Ubuntu 18.04.5, Docker 19.3.15

$ k get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

acp-master01 Ready master 185d v1.16.15 10.214.90.96 <none> Ubuntu 18.04.5 LTS 5.4.0-71-generic docker://19.3.15

acp-master02 Ready master 185d v1.16.15 10.214.90.97 <none> Ubuntu 18.04.5 LTS 5.4.0-70-generic docker://19.3.15

acp-master03 Ready master 185d v1.16.15 10.214.90.98 <none> Ubuntu 18.04.5 LTS 5.4.0-70-generic docker://19.3.15

acp-worker01 Ready <none> 138d v1.16.15 10.214.35.103 <none> Ubuntu 18.04.5 LTS 5.4.0-77-generic docker://19.3.15

acp-worker02 Ready <none> 185d v1.16.15 10.214.35.106 <none> Ubuntu 18.04.5 LTS 5.4.0-73-generic docker://19.3.15

acp-worker03 Ready <none> 161d v1.16.15 10.214.35.116 <none> Ubuntu 18.04.5 LTS 5.4.0-73-generic docker://19.3.15

$

3. Kubernetes upgrade

- Upgrade steps

✓ Kubernetes can only be upgraded from one minor version to the next (minor+1); minor versions cannot be skipped.

Upgrading from 1.16.15 to 1.20.11 therefore takes four upgrades in total, as follows.

1.16.15 ⇢ 1.17.17 ⇢ 1.18.20 ⇢ 1.19.15 ⇢ 1.20.11
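The one-minor-version-at-a-time rule can be sketched as a small shell helper. This is only an illustration: `upgrade_path` is a hypothetical function name, it expects arguments in MAJOR.MINOR form, and it does not pick the patch release for each hop (those were chosen manually above).

```shell
#!/bin/sh
# Print every minor version between two versions, one hop at a time.
# upgrade_path is a hypothetical helper; it only does arithmetic on the minor number.
upgrade_path() {
    major=${1%%.*}       # "1.16" -> "1"
    from_minor=${1#*.}   # "1.16" -> "16"
    to_minor=${2#*.}     # "1.20" -> "20"
    path=""
    m=$from_minor
    while [ "$m" -le "$to_minor" ]; do
        path="${path:+$path }$major.$m"
        m=$((m + 1))
    done
    echo "$path"
}

upgrade_path 1.16 1.20
```

Each gap between two neighbouring entries in the printed list is one `kubeadm upgrade` round across all nodes.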

- Components upgraded (1.16.15 ⇢ 1.17.17 step)

COMPONENT CURRENT AVAILABLE

API Server v1.16.15 v1.17.17

Controller Manager v1.16.15 v1.17.17

Scheduler v1.16.15 v1.17.17

Kube Proxy v1.16.15 v1.17.17

CoreDNS 1.6.2 1.6.5

Etcd 3.3.15 3.4.3-0

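- Upgrade flow and commands (1.16.15 ⇢ 1.17.17 ⇢ 1.18.20 ⇢ 1.19.15 ⇢ 1.20.11)

The flow is the same for every version step and is shown below for 1.17.17. See the attached files below for the detailed results.

Because a firewall blocked direct downloads of the Docker container images from k8s.gcr.io, the images were loaded manually.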

✓ Upgrade the primary control plane node. (Node: acp-master01)

a. apt-get update && apt-cache madison kubeadm | grep 1.17. | head -n 3

b. apt-mark unhold kubeadm && apt-get install -y kubeadm=1.17.17-00 && apt-mark hold kubeadm

c. kubeadm upgrade plan

d. docker load -i {docker container images}

e. kubeadm upgrade apply v1.17.17

f. kubectl drain acp-master01 --ignore-daemonsets --delete-local-data

g. apt-mark unhold kubelet kubectl && apt-get install -y kubelet=1.17.17-00 kubectl=1.17.17-00 && apt-mark hold kubelet kubectl

h. systemctl restart kubelet

i. kubectl uncordon acp-master01

✓ Upgrade additional control plane nodes. (Node: acp-master02, Node: acp-master03)

a. apt-mark unhold kubeadm && apt-get install -y kubeadm=1.17.17-00 && apt-mark hold kubeadm

b. kubeadm upgrade plan

c. docker load -i {docker container images}

d. kubeadm upgrade node

e. kubectl drain acp-master02 --ignore-daemonsets --delete-local-data

f. apt-mark unhold kubelet kubectl && apt-get install -y kubelet=1.17.17-00 kubectl=1.17.17-00 && apt-mark hold kubelet kubectl

g. systemctl restart kubelet

h. kubectl uncordon acp-master02

✓ Upgrade worker nodes. (Node: acp-worker01, acp-worker02, acp-worker03)

a. apt-mark unhold kubeadm && apt-get install -y kubeadm=1.17.17-00 && apt-mark hold kubeadm

b. kubeadm upgrade plan

c. docker load -i {docker container images}

d. kubeadm upgrade node

e. kubectl drain acp-worker01 --ignore-daemonsets --delete-local-data

f. apt-mark unhold kubelet kubectl && apt-get install -y kubelet=1.17.17-00 kubectl=1.17.17-00 && apt-mark hold kubelet kubectl

g. systemctl restart kubelet

h. kubectl uncordon acp-worker01
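Since the same steps repeat for every node and every version hop, it can help to generate them rather than retype them. The sketch below only prints the commands so they can be reviewed before anything runs. `print_node_steps` is a hypothetical helper, the `-00` package suffix is assumed from the steps above, and the `kubeadm upgrade plan` and `docker load` steps are left out.

```shell
#!/bin/sh
# Print (not execute) the upgrade steps for one non-primary node.
# print_node_steps is a hypothetical helper; review its output before running it.
print_node_steps() {
    node=$1               # e.g. acp-worker01
    version=$2            # e.g. 1.17.17
    pkg="${version}-00"   # apt package revision used in the steps above
    cat <<EOF
apt-mark unhold kubeadm && apt-get install -y kubeadm=${pkg} && apt-mark hold kubeadm
kubeadm upgrade node
kubectl drain ${node} --ignore-daemonsets --delete-local-data
apt-mark unhold kubelet kubectl && apt-get install -y kubelet=${pkg} kubectl=${pkg} && apt-mark hold kubelet kubectl
systemctl restart kubelet
kubectl uncordon ${node}
EOF
}

for n in acp-worker01 acp-worker02 acp-worker03; do
    echo "# ${n}"
    print_node_steps "$n" 1.17.17
done
```

Upgrading one node at a time and confirming it is Ready again before moving on keeps workloads schedulable throughout. Newer kubectl releases (1.20 and later) deprecate `--delete-local-data` in favor of `--delete-emptydir-data`.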

- Docker container images to load and their target nodes

docker pull ⇢ docker save ⇢ sftp ⇢ docker load

Docker container image Target nodes

------------------------------------------- ------------------------------

k8s.gcr.io/kube-apiserver:v1.17.17 control plane nodes

k8s.gcr.io/kube-controller-manager:v1.17.17 control plane nodes

k8s.gcr.io/kube-scheduler:v1.17.17 control plane nodes

k8s.gcr.io/kube-proxy:v1.17.17 control plane nodes & worker nodes

k8s.gcr.io/etcd:3.4.3-0 control plane nodes

k8s.gcr.io/coredns:1.6.5 control plane nodes & worker nodes
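The pull and save half of this flow can be sketched as follows. This is an illustration only: `list_images` is a hypothetical helper, the tar file naming is an assumption, and the etcd tag (3.4.3-0) and CoreDNS tag (1.6.5) are the ones from the 1.17.17 upgrade plan above, passed in explicitly because they change with each release. The loop prints the commands instead of running them.

```shell
#!/bin/sh
# List the images needed for one Kubernetes version.
# list_images is a hypothetical helper; etcd and CoreDNS tags are passed in
# because they differ per Kubernetes release.
list_images() {
    k8s=$1; etcd=$2; coredns=$3
    for c in kube-apiserver kube-controller-manager kube-scheduler kube-proxy; do
        echo "k8s.gcr.io/${c}:v${k8s}"
    done
    echo "k8s.gcr.io/etcd:${etcd}"
    echo "k8s.gcr.io/coredns:${coredns}"
}

# Print the pull/save commands for a host that can reach the registry.
list_images 1.17.17 3.4.3-0 1.6.5 | while read -r image; do
    # Turn "k8s.gcr.io/kube-proxy:v1.17.17" into "kube-proxy_v1.17.17.tar".
    file=$(echo "${image##*/}" | tr ':' '_').tar
    echo "docker pull ${image} && docker save -o ${file} ${image}"
done
```

After copying the tar files to each node with sftp, `docker load -i <file>` imports them. On a host where the target kubeadm version is installed, `kubeadm config images list --kubernetes-version v1.17.17` should print the authoritative image list, which avoids hard-coding the tags.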

- References

https://v1-17.docs.kubernetes.io/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/

https://v1-18.docs.kubernetes.io/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/

https://v1-19.docs.kubernetes.io/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/

https://v1-20.docs.kubernetes.io/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/

https://v1-21.docs.kubernetes.io/docs/tasks/administer-cluster/kubeadm/kubeadm-upgrade/

4. CNI upgrade

- flannel v0.11.0 was in use as the CNI (Container Network Interface) plugin; after the upgrade to Kubernetes 1.20.11, it was upgraded to flannel v0.14.0.

$ k describe pod -n kube-system -l app=flannel | grep Image: | head -n 1

Image: quay.io/coreos/flannel:v0.11.0-amd64

$

- flannel upgrade: v0.11.0 (29 Jan 2019) ⇢ v0.14.0 (27 Aug 2021)

https://github.com/flannel-io/flannel/blob/master/Documentation/upgrade.md

$ k get daemonsets.apps -n kube-system | egrep 'NAME|kube-flannel'

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-flannel-ds-amd64 6 6 6 2 6 <none> 179d

kube-flannel-ds-arm 0 0 0 0 0 <none> 179d

kube-flannel-ds-arm64 0 0 0 0 0 <none> 179d

kube-flannel-ds-ppc64le 0 0 0 0 0 <none> 179d

kube-flannel-ds-s390x 0 0 0 0 0 <none> 179d

$ k get pod -A -o wide | egrep 'NAME|flannel'

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system kube-flannel-ds-amd64-4n7xd 1/1 Running 2 179d 10.214.90.96 acp-master01 <none> <none>

kube-system kube-flannel-ds-amd64-c9fsv 1/1 Running 72 138d 10.214.35.103 acp-worker01 <none> <none>

kube-system kube-flannel-ds-amd64-d4w8w 1/1 Running 53 161d 10.214.35.116 acp-worker03 <none> <none>

kube-system kube-flannel-ds-amd64-dhvsw 1/1 Running 47 179d 10.214.35.106 acp-worker02 <none> <none>

kube-system kube-flannel-ds-amd64-g27kz 1/1 Running 0 179d 10.214.90.98 acp-master03 <none> <none>

kube-system kube-flannel-ds-amd64-pwngb 1/1 Running 0 179d 10.214.90.97 acp-master02 <none> <none>

$

$ k delete -f https://raw.githubusercontent.com/coreos/flannel/v0.12.0/Documentation/kube-flannel.yml

podsecuritypolicy.policy "psp.flannel.unprivileged" deleted

Warning: rbac.authorization.k8s.io/v1beta1 ClusterRole is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRole

clusterrole.rbac.authorization.k8s.io "flannel" deleted

Warning: rbac.authorization.k8s.io/v1beta1 ClusterRoleBinding is deprecated in v1.17+, unavailable in v1.22+; use rbac.authorization.k8s.io/v1 ClusterRoleBinding

clusterrolebinding.rbac.authorization.k8s.io "flannel" deleted

serviceaccount "flannel" deleted

configmap "kube-flannel-cfg" deleted

daemonset.apps "kube-flannel-ds-amd64" deleted

daemonset.apps "kube-flannel-ds-arm64" deleted

daemonset.apps "kube-flannel-ds-arm" deleted

daemonset.apps "kube-flannel-ds-ppc64le" deleted

daemonset.apps "kube-flannel-ds-s390x" deleted

$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/v0.14.0/Documentation/kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

$

$ k get pod -n kube-system -o wide | egrep 'NAME|flannel'

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-flannel-ds-6jqnx 1/1 Running 0 22m 10.214.90.97 acp-master02 <none> <none>

kube-flannel-ds-88nmf 1/1 Running 0 22m 10.214.35.116 acp-worker03 <none> <none>

kube-flannel-ds-fs9r9 1/1 Running 0 65s 10.214.35.103 acp-worker01 <none> <none>

kube-flannel-ds-lkkg6 1/1 Running 0 22m 10.214.35.106 acp-worker02 <none> <none>

kube-flannel-ds-pvh7k 1/1 Running 0 22m 10.214.90.96 acp-master01 <none> <none>

kube-flannel-ds-xj9dk 1/1 Running 0 22m 10.214.90.98 acp-master03 <none> <none>

acp@acp-master01:~$
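Instead of checking the pod list by eye, readiness can be verified from the DaemonSet counters. The sketch below is a hypothetical helper (`all_ready`) that reads `kubectl get daemonset` output on stdin and compares the DESIRED and READY columns. The sample line fed to it is illustrative input in the same column layout, not captured output.

```shell
#!/bin/sh
# Read `kubectl get daemonset` output on stdin and fail if any DaemonSet
# has a READY count different from DESIRED.
# Column layout assumed: NAME DESIRED CURRENT READY ...
all_ready() {
    awk 'NR > 1 && $2 != $4 { bad = 1; print "not ready: " $1 }
         END { exit bad + 0 }'
}

# Illustrative sample input; on a live cluster one would pipe
# `kubectl get daemonset -n kube-system kube-flannel-ds` into all_ready instead.
printf '%s\n' \
    'NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE' \
    'kube-flannel-ds 6 6 6 6 6 <none> 22m' | all_ready && echo "flannel is ready on every node"
```

`kubectl rollout status daemonset/kube-flannel-ds -n kube-system` is a built-in alternative that blocks until the rollout finishes.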

5. Kubeflow 1.2 errors and fixes

- After the upgrade to Kubernetes 1.20.11, Kubeflow and Istio pods failed with the following error.

Error: MountVolume.SetUp failed for volume "istio-token" : failed to fetch token: the API server does not have TokenRequest endpoints enabled

$ k get pod -A -o wide | egrep -v 'Run|Com|Term'

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

istio-system istio-ingressgateway-85d57dc8bc-dn8g6 0/1 ContainerCreating 0 14h <none> acp-worker01 <none> <none>

istio-system istio-pilot-77bc8867cf-hs6kg 0/2 ContainerCreating 0 14h <none> acp-worker01 <none> <none>

istio-system istio-policy-59d86bdf79-n7v2h 0/2 ContainerCreating 0 14h <none> acp-worker01 <none> <none>

istio-system istio-telemetry-6854d9f564-jgt6m 0/2 ContainerCreating 0 14h <none> acp-worker01 <none> <none>

kubeflow cache-deployer-deployment-6449c7c666-684qh 0/2 Init:0/1 0 15h <none> acp-worker02 <none> <none>

kubeflow cache-server-5f59f9c4b6-p8d9z 0/2 Init:0/1 0 14h <none> acp-worker03 <none> <none>

kubeflow kfserving-controller-manager-0 1/2 CrashLoopBackOff 174 14h 10.244.5.124 acp-worker03 <none> <none>

kubeflow metadata-writer-576f7bc46c-ndf7w 0/2 Init:0/1 0 14h <none> acp-worker01 <none> <none>

kubeflow ml-pipeline-544647c564-x8mcc 0/2 Init:0/1 0 14h <none> acp-worker03 <none> <none>

kubeflow ml-pipeline-persistenceagent-78b594c68-k85pt 0/2 Init:0/1 0 15h <none> acp-worker02 <none> <none>

kubeflow ml-pipeline-scheduledworkflow-566ff4cd4d-78g9j 0/2 Init:0/1 0 14h <none> acp-worker03 <none> <none>

kubeflow ml-pipeline-ui-5499f67d-wlbxc 0/2 Init:0/1 0 14h <none> acp-worker03 <none> <none>

kubeflow ml-pipeline-viewer-crd-bdbb7d7d6-6vwp9 0/2 Init:0/1 0 14h <none> acp-worker03 <none> <none>

kubeflow ml-pipeline-visualizationserver-769546b47b-4gw4x 0/2 Init:0/1 0 15h <none> acp-worker02 <none> <none>

kubeflow mysql-864f9c758b-chtvw 0/2 Init:0/1 0 14h <none> acp-worker01 <none> <none>

$ k describe pod istio-ingressgateway-85d57dc8bc-dn8g6 -n istio-system

...

Volumes:

istio-token:

Type: Projected (a volume that contains injected data from multiple sources)

TokenExpirationSeconds: 43200

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedMount 28m (x58 over 15h) kubelet Unable to attach or mount volumes: unmounted volumes=[istio-token], unattached volumes=[ingressgateway-ca-certs istio-ingressgateway-service-account-token-5bhjb sdsudspath istio-token istio-certs ingressgateway-certs]: timed out waiting for the condition

Warning FailedMount 23m (x62 over 15h) kubelet Unable to attach or mount volumes: unmounted volumes=[istio-token], unattached volumes=[istio-token istio-certs ingressgateway-certs ingressgateway-ca-certs istio-ingressgateway-service-account-token-5bhjb sdsudspath]: timed out waiting for the condition

Warning FailedMount 13m (x463 over 15h) kubelet MountVolume.SetUp failed for volume "istio-token" : failed to fetch token: the API server does not have TokenRequest endpoints enabled

Warning FailedMount 7m41s (x59 over 15h) kubelet Unable to attach or mount volumes: unmounted volumes=[istio-token], unattached volumes=[istio-ingressgateway-service-account-token-5bhjb sdsudspath istio-token istio-certs ingressgateway-certs ingressgateway-ca-certs]: timed out waiting for the condition

Warning FailedMount 3m11s (x96 over 15h) kubelet Unable to attach or mount volumes: unmounted volumes=[istio-token], unattached volumes=[sdsudspath istio-token istio-certs ingressgateway-certs ingressgateway-ca-certs istio-ingressgateway-service-account-token-5bhjb]: timed out waiting for the condition

acp@acp-master01:~$

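- istio-token is the token used as a credential when calling the Kubernetes API. It is mounted into the pod as a projected service account token volume, which the kubelet obtains through the API server's TokenRequest endpoint.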

$ k get pod istio-ingressgateway-85d57dc8bc-dn8g6 -n istio-system -o yaml

...

volumes:

- name: istio-token

projected:

defaultMode: 420

sources:

- serviceAccountToken:

audience: istio-ca

expirationSeconds: 43200

path: istio-token

...

$

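- The settings that had been added at Kubeflow installation time to enable this feature were removed during the Kubernetes upgrade, most likely because kubeadm regenerates the kube-apiserver static Pod manifest on upgrade.

service-account-issuer, service-account-signing-key-file

The issue was resolved by adding the flags back on each control plane node (acp-master01, acp-master02, acp-master03), as shown below.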

$ sudo vi /etc/kubernetes/manifests/kube-apiserver.yaml

...

- --service-account-key-file=/etc/kubernetes/pki/sa.pub

- --service-account-issuer=kubernetes.default.svc # appended

- --service-account-signing-key-file=/etc/kubernetes/pki/sa.key # appended

...

$
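Because these flags can disappear again on a later upgrade, a quick check after each hop may be useful. The following is a minimal sketch: `check_sa_flags` is a hypothetical helper that only reads the manifest, and the demo runs against a temporary file that mimics the manifest before the fix rather than the real `/etc/kubernetes/manifests/kube-apiserver.yaml`.

```shell
#!/bin/sh
# Report which TokenRequest-related flags are missing from a kube-apiserver manifest.
# check_sa_flags is a hypothetical helper; it only reads the file.
check_sa_flags() {
    manifest=$1
    missing=0
    for flag in service-account-issuer service-account-signing-key-file; do
        if ! grep -q -- "--${flag}=" "$manifest"; then
            echo "missing: --${flag}"
            missing=1
        fi
    done
    return "$missing"
}

# Demo against a temporary file that mimics the manifest before the fix.
tmp=$(mktemp)
printf '%s\n' '    - --service-account-key-file=/etc/kubernetes/pki/sa.pub' > "$tmp"
check_sa_flags "$tmp" || echo "flags need to be re-added"
rm -f "$tmp"
```

On a control plane node the call would be `check_sa_flags /etc/kubernetes/manifests/kube-apiserver.yaml`. To keep the flags across upgrades, they can likely also be recorded under `apiServer.extraArgs` in the kubeadm ClusterConfiguration, so that regenerated manifests include them.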

6. Compatibility review

a. Kubernetes dashboard (reference: Dashboard on bare-metal)

- Compatibility review

v2.0.0-rc3 supports Kubernetes only up to 1.16, so it will be upgraded to v2.4.0.

https://github.com/kubernetes/dashboard/releases

Dashboard Kubernetes compatibility

v2.0.0-rc3 (installed version) 1.16.15

v2.4.0 (15 Oct 2021) 1.20, 1.21

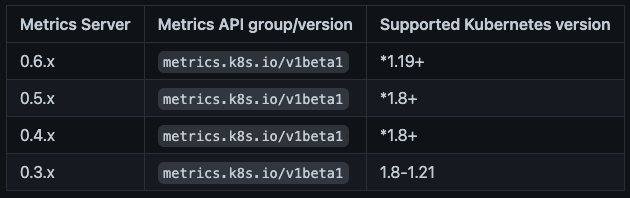

b. metrics-server (reference: Metrics-server)

- Compatibility review

metrics-server 0.3.7 is in use and supports Kubernetes up to 1.21, so an upgrade is not strictly necessary.

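c. Kubeflow (reference: Kubeflow 1.2 on-prem setup)

- Kubeflow compatibility review

✓ We plan to upgrade Kubeflow from 1.2 to 1.4 for the following reasons.

Kubeflow 1.2 has not been fully tested and validated on Kubernetes 1.20.

The Knative and Istio versions used by Kubeflow 1.2 are not the recommended ones when their compatibility is taken into account.

Features added in Kubeflow 1.4 are needed.

✓ We could not find Kubernetes compatibility information for Kubeflow 1.4. The repository below, however, indicates that Kubeflow 1.4 was tested on Kubernetes 1.19.

https://github.com/kubeflow/manifests

✓ Considering Kubeflow compatibility alone, Kubernetes 1.19 would be the right choice, but we upgraded to 1.20 to follow the upgrade strategy of our in-house container platform (Flyingcube).

- Kubeflow compatibility

Kubeflow 1.2, the version in use, was fully tested and validated on Kubernetes 1.14 to 1.16. It has not been fully tested on Kubernetes 1.20, although there are no known issues.

- Istio/Knative compatibility

- Knative and Istio versions by Kubeflow version

Taking Istio and Knative compatibility into account: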

Kubeflow Knative Istio

1.2 0.14.3 1.3

1.3 0.17.4 1.9

1.4 0.22.1 1.9.6
