1. Overview

- This post briefly walks through the steps for building a Kubernetes cluster on Amazon EC2 in AWS.

- Environment: Amazon EC2 (Red Hat Enterprise Linux 9.2), CRI-O 1.25.4, Kubernetes 1.28, flannel 0.22.2.

- Note that AWS also offers managed container services: Amazon EKS (Elastic Kubernetes Service) and Amazon ECS (Elastic Container Service).

2. AWS EC2 setup

2.1 Create a key pair

Create the key used to connect to the Amazon EC2 instances on which Kubernetes will be installed.

- Menu

EC2 console > Network & Security > Key Pairs > Create key pair

- Settings

- Additional steps

To connect to Amazon EC2 with the key saved on your PC (Mac), first move the key into ~/.ssh and restrict its permissions:

```
➜ .ssh pwd
/Users/yoosung/.ssh
➜ .ssh mv ~/Downloads/410073890645_us-east-1.pem .
➜ .ssh chmod 600 410073890645_us-east-1.pem
➜ .ssh
```
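ssh refuses to use a private key that other users can read (it reports an "UNPROTECTED PRIVATE KEY FILE" warning), which is why the key is set to mode 600 above. The two commands can be wrapped in a small function; this is a sketch, and the name `install_pem_key` is hypothetical. It tries GNU `stat -c` first and falls back to the BSD/macOS `stat -f` form.

```shell
#!/usr/bin/env bash
# Hypothetical helper: move a downloaded .pem key into a directory and make it owner-only.
#   $1 path of the downloaded key, $2 destination directory (e.g. ~/.ssh)
install_pem_key() {
  local src="$1" dest_dir="$2"
  local dest="$dest_dir/$(basename "$src")"
  mkdir -p "$dest_dir"
  mv "$src" "$dest"
  chmod 600 "$dest"
  # Print the resulting mode: GNU stat first, BSD/macOS stat as a fallback
  stat -c %a "$dest" 2>/dev/null || stat -f %Lp "$dest"
}
```

For example, `install_pem_key ~/Downloads/410073890645_us-east-1.pem ~/.ssh` should print `600`.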

2.2 Create security groups

Create the security groups used by the Amazon EC2 instances and the Amazon ELB (Elastic Load Balancing).

- Menu

EC2 console > Network & Security > Security Groups > Create security group

- Security group name: lab-k8s-nlb

Security group for the Amazon ELB. Its inbound rule lets anyone reach the Kubernetes API Server.

- Security group name: lab-k8s-control-sg

Security group for the Kubernetes control-plane nodes.

My IP is added as a source so that you can log in to the Amazon EC2 instances (port 22) to set up Kubernetes. Change it to match your own environment.

The ELB's security group ID is added as a source so that the Amazon ELB can reach the Kubernetes API Server (port 6443).

The CIDR of the VPC the cluster runs in is added so that the Kubernetes control plane and nodes can reach each other. Change it to match your own environment.

- Security group name: lab-k8s-node-sg

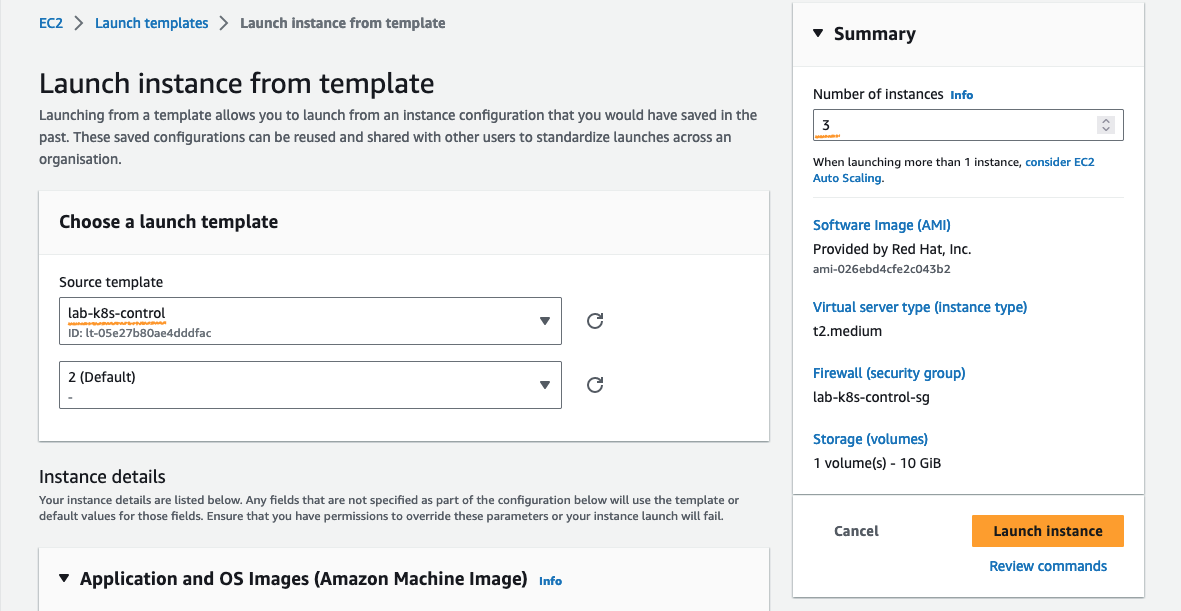
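The inbound rules above were created in the console. As a sketch, the same rules for lab-k8s-nlb and lab-k8s-control-sg could be added with the AWS CLI. The security group IDs, your IP, and the VPC CIDR are placeholders you pass in; allowing all protocols (`--protocol -1`) from the VPC CIDR is an assumption, since the original rule is only shown as a screenshot. The rules for lab-k8s-node-sg are likewise only in a screenshot and are not scripted here. By default the `run` wrapper only prints each command; set `DRY_RUN=0` to execute.

```shell
#!/usr/bin/env bash
# Print (DRY_RUN=1, the default) or execute (DRY_RUN=0) a command.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Hypothetical helper for lab-k8s-nlb: Kubernetes API (6443) from anywhere.
#   $1 NLB security group ID
add_nlb_sg_rules() {
  run aws ec2 authorize-security-group-ingress --group-id "$1" --protocol tcp --port 6443 --cidr 0.0.0.0/0
}

# Hypothetical helper for lab-k8s-control-sg.
#   $1 control-plane SG ID, $2 your IP as CIDR (x.x.x.x/32), $3 NLB SG ID, $4 VPC CIDR
add_control_sg_rules() {
  local sg="$1" my_ip="$2" nlb_sg="$3" vpc_cidr="$4"
  # SSH from your own IP only
  run aws ec2 authorize-security-group-ingress --group-id "$sg" --protocol tcp --port 22 --cidr "$my_ip"
  # Kubernetes API server from the NLB's security group
  run aws ec2 authorize-security-group-ingress --group-id "$sg" --protocol tcp --port 6443 --source-group "$nlb_sg"
  # All traffic from inside the VPC so control-plane and worker nodes can talk to each other
  run aws ec2 authorize-security-group-ingress --group-id "$sg" --protocol -1 --cidr "$vpc_cidr"
}
```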

2.3 Create launch templates

- Menu

EC2 console > Instances > Launch Templates > Create launch template

- Launch template name: lab-k8s-control

✓ Settings

Spec.: RHEL 9, 64-bit (x86), t2.medium (2 vCPU, 4 GiB), SSD 10 GiB

Env.: lab-k8s-control-sg (Security group), 410073890645_us-east-1 (Key pair)

- Launch template name: lab-k8s-node

✓ Settings

Spec.: RHEL 9, 64-bit (x86), r5.2xlarge (8 vCPU, 64 GiB), SSD 10 GiB

Env.: lab-k8s-node-sg (Security group), 410073890645_us-east-1 (Key pair)

2.4 Amazon EC2 인스턴스 생성

- 메뉴

EC2 console > Instances > Instance Templates > {lab-k8s-control | lab-k8s-node} > Actions > Launch instance from template

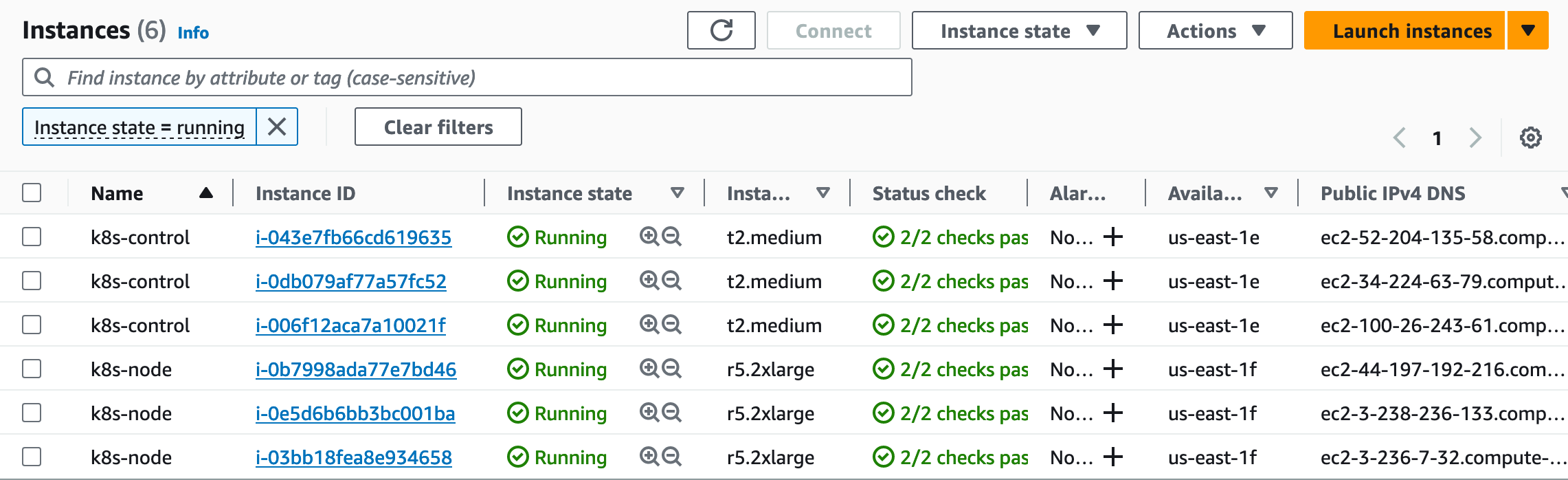

- Kuberneters Control 3대, Kuberneters Node 3대 생성

- 생성 결과
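If you prefer the AWS CLI to the console, `aws ec2 run-instances` can launch instances from a launch template. This sketch launches a given number of instances from one template and sets their Name tag; K8s-Control matches the name used when selecting targets in 2.5.1, while K8s-Node is a hypothetical name for the workers. As before, commands are only printed unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Print (DRY_RUN=1, the default) or execute (DRY_RUN=0) a command.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

# Hypothetical helper: launch N instances from a launch template and tag them with a Name.
#   $1 launch template name, $2 Name tag value, $3 number of instances
launch_from_template() {
  local template="$1" name="$2" count="$3"
  run aws ec2 run-instances \
    --launch-template "LaunchTemplateName=$template" \
    --count "$count" \
    --tag-specifications "ResourceType=instance,Tags=[{Key=Name,Value=$name}]"
}
```

Usage: `launch_from_template lab-k8s-control K8s-Control 3` and `launch_from_template lab-k8s-node K8s-Node 3`.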

2.5 Create the Amazon ELB

2.5.1 Create a target group

- Menu

EC2 console > Load balancing > Target groups > Create target group

- Settings

Choose a target type: Instances

Target group name: lab-k8s-control

Protocol / Port: TCP / 6443

VPC: default

Health check protocol: TCP

Available instances: select the 3 instances named K8s-Control

Ports for the selected instances: 6443

Click Include as pending below

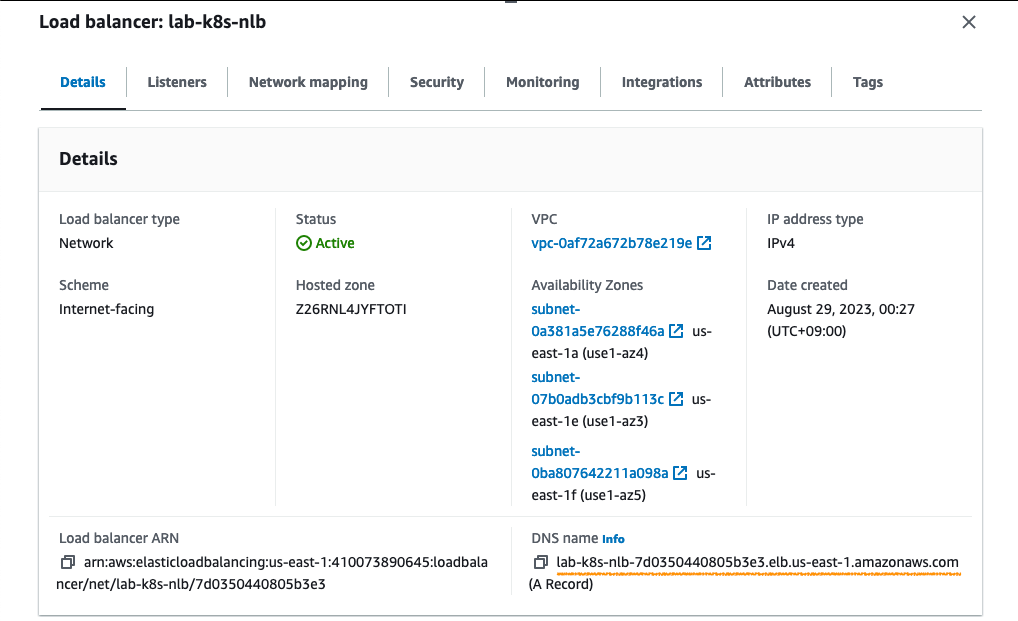
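The same target group can be sketched with the AWS CLI (`aws elbv2`). The VPC ID and instance IDs are placeholders you pass in. The real `create-target-group` call returns the target group ARN, which `register-targets` needs; here it is read from a hypothetical `TG_ARN` variable. Commands are only printed unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Print (DRY_RUN=1, the default) or execute (DRY_RUN=0) a command.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

# Hypothetical helper: TCP/6443 target group with TCP health checks, then register the instances.
#   $1 VPC ID, remaining args: instance IDs of the K8s-Control instances
create_control_target_group() {
  local vpc_id="$1"; shift
  local targets=() id
  for id in "$@"; do
    targets+=("Id=$id,Port=6443")
  done
  run aws elbv2 create-target-group --name lab-k8s-control --protocol TCP --port 6443 \
    --vpc-id "$vpc_id" --target-type instance --health-check-protocol TCP
  # TG_ARN is a placeholder for the ARN returned by create-target-group
  run aws elbv2 register-targets --target-group-arn "${TG_ARN:-<target-group-arn>}" --targets "${targets[@]}"
}
```

With `DRY_RUN=0`, the ARN can be captured by adding `--query 'TargetGroups[0].TargetGroupArn' --output text` to the create call.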

2.5.2 Create the load balancer

- Menu

EC2 console > Load balancing > Load Balancers > Create load balancer

- Settings

Load balancer type: Network Load Balancer (NLB)

Load balancer name: lab-k8s-nlb

Scheme: Internet-facing

IP address type: IPv4

VPC: default

Mappings: select two or more AZs for high availability

In this example, three AZs were selected, including us-east-1e and us-east-1f where the EC2 instances are deployed.

Security groups: lab-k8s-nlb

Listener: TCP / 6443

Target group: lab-k8s-control

- Result

The DNS name of the Amazon ELB created here is passed as an argument to the kubeadm command when setting up Kubernetes.
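As a sketch of the same NLB with the AWS CLI: create the load balancer, add a TCP/6443 listener that forwards to the target group, then look up the DNS name that kubeadm needs. The security group ID, target group ARN, and subnet IDs (one per selected AZ) are placeholders; the load balancer ARN returned by the first call is read from a hypothetical `NLB_ARN` variable. Commands are only printed unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Print (DRY_RUN=1, the default) or execute (DRY_RUN=0) a command.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

# Hypothetical helper: internet-facing NLB plus a TCP/6443 listener.
#   $1 NLB security group ID, $2 target group ARN, remaining args: subnet IDs
create_api_nlb() {
  local nlb_sg="$1" tg_arn="$2"; shift 2
  run aws elbv2 create-load-balancer --name lab-k8s-nlb --type network \
    --scheme internet-facing --ip-address-type ipv4 \
    --security-groups "$nlb_sg" --subnets "$@"
  # NLB_ARN is a placeholder for the ARN returned by create-load-balancer
  run aws elbv2 create-listener --load-balancer-arn "${NLB_ARN:-<nlb-arn>}" \
    --protocol TCP --port 6443 \
    --default-actions "Type=forward,TargetGroupArn=$tg_arn"
}

# Print the NLB's DNS name, the value used for kubeadm init --control-plane-endpoint
nlb_dns_name() {
  run aws elbv2 describe-load-balancers --names lab-k8s-nlb \
    --query 'LoadBalancers[0].DNSName' --output text
}
```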

3. K8s 구성

- 본 Kubernetes 구성 내역은 아래 문서를 참조하였으며, 최대한 간단하게 작성하기 위해서 필수 명령어만 포함하고 있습니다.

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/

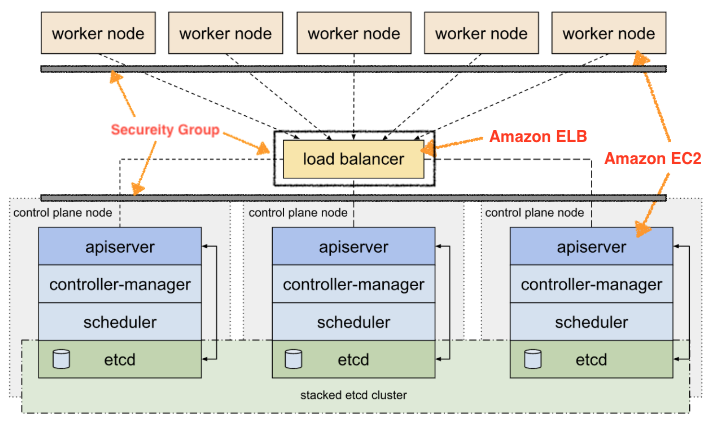

- 구성도

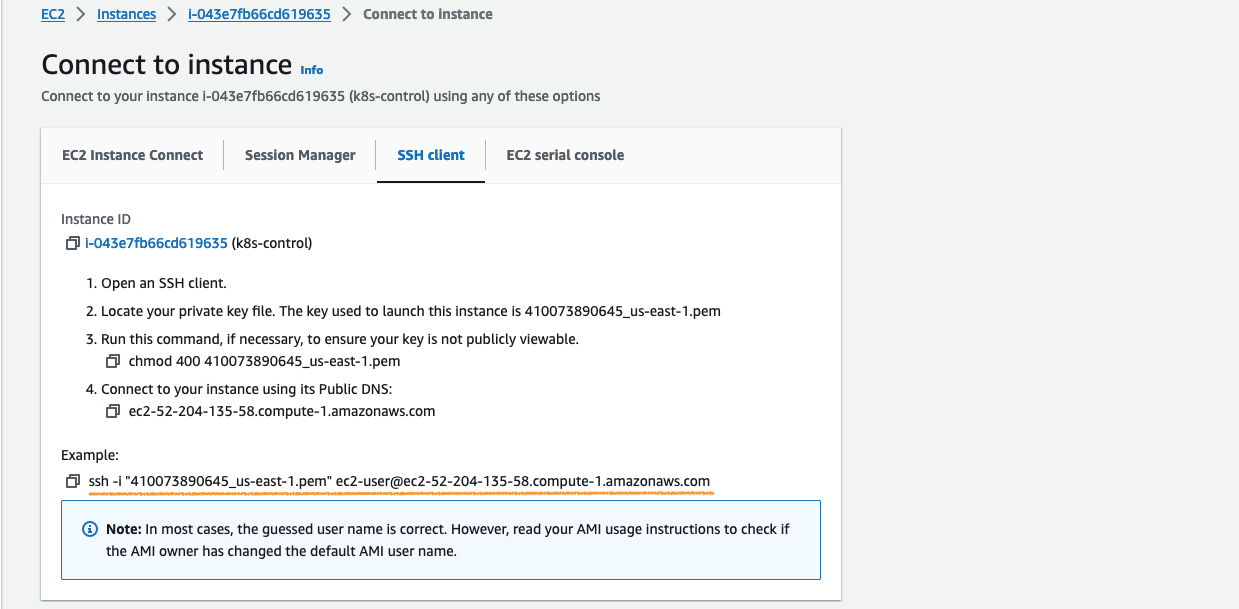

3.1 Amazon EC2 인스턴스 접속

- SSH 접속 명령어 확인

EC2 console > Instances > {Instance} > Connect > SSH client

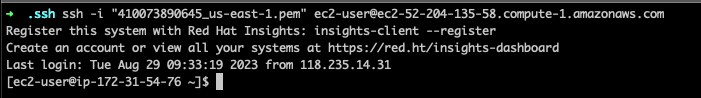

- EC2 인스턴스 접속

2장에서 생성한 Key pair가 저장된 위치에서 접속 명령어를 실행하면 됩니다.
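Sections 3.2 to 3.4 must be run on all six instances. One way to avoid repeating them by hand is to save those commands in a local script and feed it to each instance over SSH. This sketch assumes a hypothetical script file (for example `prep.sh`) and the RHEL AMI's default `ec2-user` login, which matches the prompts shown later in this post. It only prints the ssh commands unless `DRY_RUN=0` is set.

```shell
#!/usr/bin/env bash
# Print (DRY_RUN=1, the default) or execute (DRY_RUN=0) a command.
run() { if [ "${DRY_RUN:-1}" = "1" ]; then echo "+ $*"; else "$@"; fi; }

# Hypothetical helper: run a local shell script on each host via "ssh ... bash -s".
#   $1 private key file, $2 local script, remaining args: host names or IPs
run_on_hosts() {
  local key="$1" script="$2"; shift 2
  local host
  for host in "$@"; do
    # The script is fed to the remote bash on stdin, once per host
    run ssh -i "$key" "ec2-user@$host" 'bash -s' < "$script"
  done
}
```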

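3.2 Prerequisites (K8s control-plane nodes + K8s worker nodes)

- Disable swap

```shell
sudo swapoff -a

# Append (-a) instead of overwriting, so existing settings in /etc/sysctl.conf are kept
cat <<EOF | sudo tee -a /etc/sysctl.conf
vm.swappiness=0
EOF

sudo sysctl --system
```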
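`swapoff -a` only lasts until the next reboot; any swap entry in /etc/fstab would be activated again at boot. The sketch below comments out such entries and counts active swap devices from /proc/swaps (whose first line is a header). Both function names are hypothetical, and the file paths are parameters only so the helpers can be tried on copies.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prefix '#' to uncommented fstab lines whose 3rd field (fs type) is swap.
# A backup is written next to the file with a .bak suffix.
disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}"
  sed -i.bak -E '/^[^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap([[:space:]]|$)/ s/^/#/' "$fstab"
}

# Hypothetical helper: number of active swap devices (0 means swap is fully off).
active_swap_count() {
  local swaps="${1:-/proc/swaps}"
  # Skip the header line, count the remaining non-empty lines
  tail -n +2 "$swaps" | grep -c . || true
}
```

On a node, `sudo bash -c "$(declare -f disable_swap_in_fstab); disable_swap_in_fstab"` followed by `active_swap_count` should leave swap off and print `0`.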

3.3 Install the container runtime (K8s control-plane nodes + K8s worker nodes)

- Forward IPv4 and let iptables see bridged traffic

```shell
cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf
overlay
br_netfilter
EOF

sudo modprobe overlay
sudo modprobe br_netfilter

# sysctl params required by setup, params persist across reboots
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-iptables  = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward                 = 1
EOF

# Apply sysctl params without reboot
sudo sysctl --system

# Verify that the modules are loaded and the params are set to 1
lsmod | grep br_netfilter
lsmod | grep overlay
sysctl net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward
```
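The last `sysctl` command prints the three values for a human to read. As a sketch, the same check can return a pass/fail status by reading the files under /proc/sys directly. The function name is hypothetical, and the `PROC_SYS` override exists only so the check can be exercised against a fake directory. The net.bridge.* entries appear only once br_netfilter is loaded, so a "missing" result usually points at the modprobe step.

```shell
#!/usr/bin/env bash
# Hypothetical check: each kernel parameter set above must read as 1.
# Returns non-zero if any value is not 1 or the entry is missing.
check_k8s_sysctls() {
  local root="${PROC_SYS:-/proc/sys}" key path val failed=0
  for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables net.ipv4.ip_forward; do
    # net.ipv4.ip_forward -> <root>/net/ipv4/ip_forward
    path="$root/$(printf '%s' "$key" | tr . /)"
    val="$(cat "$path" 2>/dev/null || true)"
    if [ "$val" = "1" ]; then
      echo "OK   $key = 1"
    else
      echo "FAIL $key = ${val:-<missing>}"
      failed=1
    fi
  done
  return "$failed"
}
```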

- Install CRI-O

Red Hat 9.2 is not among the systems listed in CRI-O's install guide, so CentOS 9 Stream is used instead, with 1.25 as the CRI-O version. Note that CRI-O's minor version is intended to track the Kubernetes minor version, so a 1.28 CRI-O would be the closer match for Kubernetes 1.28.

https://github.com/cri-o/cri-o/blob/main/install.md#other-yum-based-operating-systems

```shell
OS=CentOS_9_Stream
VERSION=1.25

sudo curl -L -o /etc/yum.repos.d/devel:kubic:libcontainers:stable.repo https://download.opensuse.org/repositories/devel:/kubic:/libcontainers:/stable/$OS/devel:kubic:libcontainers:stable.repo
sudo curl -L -o /etc/yum.repos.d/devel:kubic:libcontainers:stable:cri-o:$VERSION.repo https://download.opensuse.org/repositories/devel:kubic:libcontainers:stable:cri-o:$VERSION/$OS/devel:kubic:libcontainers:stable:cri-o:$VERSION.repo

yum repolist | egrep 'repo name|devel'

sudo yum install cri-o -y

# enable --now starts CRI-O and also makes it start again after a reboot
sudo systemctl enable --now crio
sudo systemctl status crio
```
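Once cri-tools is installed (it is pulled in alongside kubeadm in the next step), `crictl` can be used to inspect CRI-O directly. Pointing crictl at CRI-O's socket explicitly keeps it from guessing among runtime endpoints. This sketch writes such a config; `/var/run/crio/crio.sock` is CRI-O's default socket path, and the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a crictl config that points at the CRI-O socket.
#   $1 output file (defaults to /etc/crictl.yaml, which needs root)
write_crictl_config() {
  local out="${1:-/etc/crictl.yaml}"
  cat > "$out" <<'EOF'
runtime-endpoint: unix:///var/run/crio/crio.sock
image-endpoint: unix:///var/run/crio/crio.sock
timeout: 10
EOF
}
```

For example, `write_crictl_config /tmp/crictl.yaml && sudo mv /tmp/crictl.yaml /etc/crictl.yaml`, then `sudo crictl info` to confirm CRI-O answers.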

3.4 Install kubeadm, kubelet and kubectl (K8s control-plane nodes + K8s worker nodes)

- Set SELinux to permissive mode

```shell
# Set SELinux in permissive mode (effectively disabling it)
sudo setenforce 0
sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config
```

- Install the Kubernetes packages

```shell
# This overwrites any existing configuration in /etc/yum.repos.d/kubernetes.repo
cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://pkgs.k8s.io/core:/stable:/v1.28/rpm/
enabled=1
gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/v1.28/rpm/repodata/repomd.xml.key
exclude=kubelet kubeadm kubectl cri-tools kubernetes-cni
EOF

sudo yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

# Until kubeadm init/join runs, kubelet restarts every few seconds while it waits for a configuration; this is expected
sudo systemctl enable --now kubelet
sudo systemctl status kubelet
```
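The repository above is pinned to the v1.28 stream, and its `exclude=` line keeps a plain `yum update` from upgrading these packages by accident. As a quick sanity check, this sketch tests whether a version string belongs to the expected minor release; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical check: does a version like "v1.28.2" belong to minor release "1.28"?
#   $1 version string (a leading "v" is optional), $2 expected major.minor
is_minor_version() {
  local version="${1#v}" expected="$2"
  case "$version" in
    "$expected"|"$expected".*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `is_minor_version "$(kubeadm version -o short)" 1.28 && echo "kubeadm is 1.28"`.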

3.5 Kubernetes setup

3.5.1 Configure the Kubernetes control-plane nodes

- Note: Starting with v1.22, when creating a cluster with kubeadm, if the user does not set the cgroupDriver field under KubeletConfiguration, kubeadm defaults it to systemd.

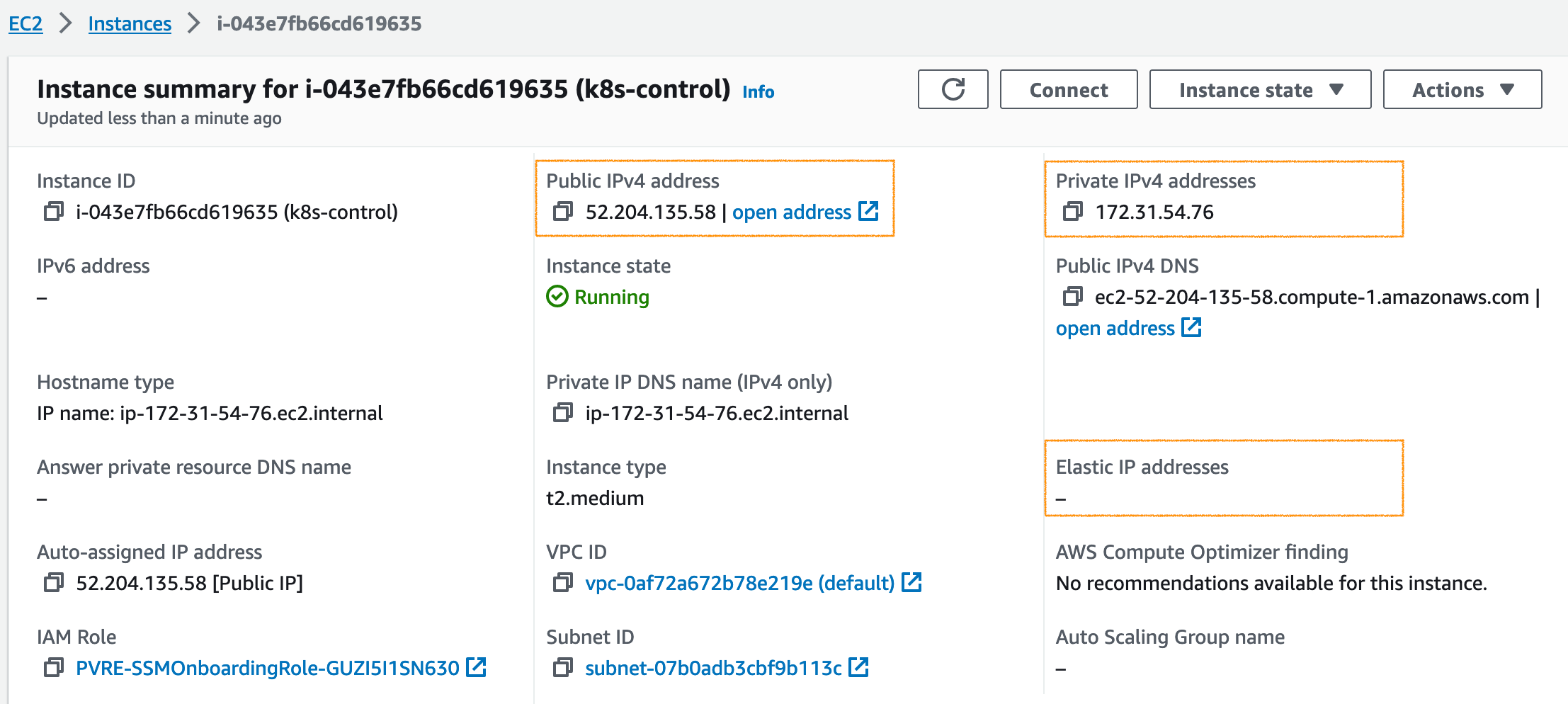
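kubelet and the container runtime must use the same cgroup driver. CRI-O's default `cgroup_manager` is systemd, which matches kubeadm's default noted above. The sketch below reads both settings so they can be compared after `kubeadm init`/`join` has written `/var/lib/kubelet/config.yaml`. The helper names are hypothetical, and checking only `/etc/crio/crio.conf` is a simplification: drop-in files under `/etc/crio/crio.conf.d/` can also set the value.

```shell
#!/usr/bin/env bash
# Hypothetical helper: the "cgroupDriver:" value from a kubelet config file.
kubelet_cgroup_driver() {
  awk '$1 == "cgroupDriver:" { print $2 }' "${1:-/var/lib/kubelet/config.yaml}"
}

# Hypothetical helper: the last uncommented cgroup_manager = "..." in a CRI-O config.
# If none is set, CRI-O's default (systemd) applies.
crio_cgroup_manager() {
  local v
  v="$(sed -n -E 's/^[[:space:]]*cgroup_manager[[:space:]]*=[[:space:]]*"([^"]*)".*/\1/p' "${1:-/etc/crio/crio.conf}" | tail -n 1)"
  echo "${v:-systemd}"
}
```

On a node, `[ "$(sudo cat /var/lib/kubelet/config.yaml | kubelet_cgroup_driver /dev/stdin)" = "$(crio_cgroup_manager)" ]` should succeed.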

- Configure control-plane node #1 (Amazon EC2 instance)

Set the control-plane-endpoint parameter to the DNS name of the Amazon ELB.

Set the apiserver-advertise-address parameter to the private IP address of control-plane node #1.
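For reference, the same settings can also be written as a kubeadm configuration file (API version `kubeadm.k8s.io/v1beta3`, which kubeadm 1.28 uses) and passed with `--config` instead of individual flags. This sketch generates such a file; the helper name and the file name are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a kubeadm config equivalent to the init flags used in this post.
#   $1 output file, $2 control-plane endpoint (NLB DNS name:6443),
#   $3 this node's private IP, $4 Pod network CIDR
write_kubeadm_config() {
  local out="$1" endpoint="$2" advertise_ip="$3" pod_cidr="$4"
  cat > "$out" <<EOF
apiVersion: kubeadm.k8s.io/v1beta3
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: ${advertise_ip}
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
controlPlaneEndpoint: "${endpoint}"
networking:
  podSubnet: ${pod_cidr}
EOF
}
```

Usage: `write_kubeadm_config kubeadm-config.yaml lab-k8s-nlb-7d0350440805b3e3.elb.us-east-1.amazonaws.com:6443 172.31.54.76 10.244.0.0/16`, then `sudo kubeadm init --config kubeadm-config.yaml --upload-certs`.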

```shell
sudo kubeadm init --control-plane-endpoint="lab-k8s-nlb-7d0350440805b3e3.elb.us-east-1.amazonaws.com:6443" \
  --apiserver-advertise-address=172.31.54.76 \
  --upload-certs \
  --pod-network-cidr=10.244.0.0/16

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

- Control-plane node #1 result

If the command succeeds, output like the following is printed. It includes the command for adding control-plane nodes #2 and #3 and the command for adding worker nodes #1 to #3.

```
...<omitted>...

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

  kubeadm join lab-k8s-nlb-7d0350440805b3e3.elb.us-east-1.amazonaws.com:6443 --token 9kqvts.8o3t70bwra02h7zi \
        --discovery-token-ca-cert-hash sha256:18f6a892a4753fc30b3b16c39f674d6d54c2d15fcbb6e9bea544258720372419 \
        --control-plane --certificate-key b1af64d68dccd20a735dcde059ef1c17716c40bd4f84069e4fd73f1f1572e276

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join lab-k8s-nlb-7d0350440805b3e3.elb.us-east-1.amazonaws.com:6443 --token 9kqvts.8o3t70bwra02h7zi \
        --discovery-token-ca-cert-hash sha256:18f6a892a4753fc30b3b16c39f674d6d54c2d15fcbb6e9bea544258720372419
[root@ip-172-31-54-76 .kube]#
```

- Configure control-plane nodes #2 and #3

```shell
# Run on control-plane nodes #2 and #3; set --apiserver-advertise-address to that node's private IP
sudo kubeadm join lab-k8s-nlb-7d0350440805b3e3.elb.us-east-1.amazonaws.com:6443 \
  --token 9kqvts.8o3t70bwra02h7zi \
  --discovery-token-ca-cert-hash sha256:18f6a892a4753fc30b3b16c39f674d6d54c2d15fcbb6e9bea544258720372419 \
  --control-plane \
  --certificate-key b1af64d68dccd20a735dcde059ef1c17716c40bd4f84069e4fd73f1f1572e276 \
  --apiserver-advertise-address=xxx.xxx.xxx.xxx
```
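The join values expire: bootstrap tokens are valid for 24 hours by default, and, as the init output says, the uploaded certificates are deleted after two hours. On control-plane node #1, `kubeadm token create --print-join-command` prints a fresh worker join command, and `sudo kubeadm init phase upload-certs --upload-certs` prints a new certificate key. The `--discovery-token-ca-cert-hash` value can also be recomputed from the cluster CA; the function below wraps the openssl pipeline from the kubeadm join documentation. The function name is hypothetical, and the pipeline assumes an RSA CA key, which is kubeadm's default.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compute the discovery-token-ca-cert-hash for a CA certificate.
#   $1 CA certificate (defaults to kubeadm's /etc/kubernetes/pki/ca.crt)
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  # SHA-256 over the DER-encoded public key of the CA certificate
  echo "sha256:$(openssl x509 -pubkey -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex | sed 's/^.* //')"
}
```

On control-plane node #1, `sudo bash -c "$(declare -f ca_cert_hash); ca_cert_hash"` should print the same `sha256:18f6...` value shown in the join commands.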

3.5.2 Configure the Kubernetes worker nodes

- Configure worker nodes #1 to #3

```shell
sudo kubeadm join lab-k8s-nlb-7d0350440805b3e3.elb.us-east-1.amazonaws.com:6443 \
  --token 9kqvts.8o3t70bwra02h7zi \
  --discovery-token-ca-cert-hash sha256:18f6a892a4753fc30b3b16c39f674d6d54c2d15fcbb6e9bea544258720372419
```
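After all six nodes have joined, `kubectl get nodes` on control-plane node #1 should list them, but they report NotReady until a CNI add-on is deployed (next step). This sketch counts the nodes whose STATUS column is exactly `Ready`; the function name is hypothetical, and a status such as `Ready,SchedulingDisabled` is deliberately not counted.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count "Ready" nodes in `kubectl get nodes --no-headers` output read from stdin.
count_ready_nodes() {
  # Column 2 is STATUS in the default kubectl get nodes output
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}
```

Usage: `kubectl get nodes --no-headers | count_ready_nodes` should print 6 once flannel is running.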

3.5.3 Configure the CNI (Container Network Interface)

- You must deploy a Container Network Interface (CNI) based Pod network add-on so that your Pods can communicate with each other. Cluster DNS (CoreDNS) will not start up before a network is installed.

- Deploy flannel

flannel's default Pod network is 10.244.0.0/16, which is why --pod-network-cidr=10.244.0.0/16 was passed to kubeadm init.

https://github.com/flannel-io/flannel#deploying-flannel-manually

```shell
kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml
```
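The URL above always fetches the latest flannel release, while this post's environment lists flannel 0.22.2. One option is to download the manifest for a specific release first (assuming the release publishes kube-flannel.yml as an asset, as the `latest/download` URL implies) and confirm that its `Network` value matches the `--pod-network-cidr` given to kubeadm before applying it. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the first "Network" value from flannel's net-conf.json in a manifest.
flannel_network() {
  sed -n -E 's/.*"Network":[[:space:]]*"([^"]+)".*/\1/p' "$1" | head -n 1
}
```

For example: `curl -sL -o kube-flannel.yml https://github.com/flannel-io/flannel/releases/download/v0.22.2/kube-flannel.yml`, then `[ "$(flannel_network kube-flannel.yml)" = "10.244.0.0/16" ] && kubectl apply -f kube-flannel.yml`.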

3.6 Kubernetes client setup and test

- Set up the kubectl environment

Set up the environment in which kubectl commands will run. The example below is for a Red Hat/CentOS environment.

```shell
sudo yum install -y bash-completion

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

cat <<EOF | tee -a ~/.bash_profile
# Setup autocomplete in bash into the current shell.
source <(kubectl completion bash)
alias k=kubectl
complete -F __start_kubectl k
EOF

source ~/.bash_profile

# kubectl 1.28 no longer accepts --short; the default output is already the short form
k version
```

- Deploy and check a sample Pod

```shell
cat <<EOF | tee nginx-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-nginx-pod
spec:
  containers:
  - name: my-nginx-container
    image: nginx:latest
    ports:
    - containerPort: 80
      protocol: TCP
EOF

kubectl apply -f nginx-pod.yaml
```

```
[ec2-user@ip-172-31-54-76 ~]$ k get pod
NAME           READY   STATUS    RESTARTS   AGE
my-nginx-pod   1/1     Running   0          10h
[ec2-user@ip-172-31-54-76 ~]$
[ec2-user@ip-172-31-54-76 ~]$ k describe pod my-nginx-pod
Name:             my-nginx-pod
Namespace:        default
Priority:         0
Service Account:  default
Node:             ip-172-31-78-137.ec2.internal/172.31.78.137
Start Time:       Mon, 28 Aug 2023 17:16:16 +0000
Labels:           <none>
Annotations:      <none>
Status:           Running
IP:               10.244.3.2
IPs:
  IP:  10.244.3.2
Containers:
  my-nginx-container:
    Container ID:   cri-o://e5a25abad3ae48297d29a7cb4150f620cc3bc56783a8c261d5c731c91bafca0f
    Image:          nginx:latest
    Image ID:       docker.io/library/nginx@sha256:104c7c5c54f2685f0f46f3be607ce60da7085da3eaa5ad22d3d9f01594295e9c
    Port:           80/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Mon, 28 Aug 2023 17:16:20 +0000
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-6d8hl (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  kube-api-access-6d8hl:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   BestEffort
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:                      <none>
[ec2-user@ip-172-31-54-76 ~]$
```
