2021.06.25

1. Distributed training (TensorFlow) in a Kubeflow Jupyter environment with GPUs allocated

- Environments

✓ Remote - Development environment

Kubeflow Jupyter (GPU)

✓ Remote - Training environment

Kubeflow 1.2 (The machine learning toolkit for Kubernetes) / Kubernetes 1.16.15

Harbor 2.2.1 (Private docker registry)

NVIDIA V100 / Driver 450.80, CUDA 11.2, cuDNN 8.1.0

CentOS 7.8

- Related technologies

- TensorFlow MirroredStrategy (data parallelism)
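MirroredStrategy performs synchronous data parallelism on one machine. Every GPU holds a copy (replica) of the model, and each replica processes its own slice of the global batch. The per-replica gradients are then combined with an all-reduce, so all copies apply the same update.

The code below is a conceptual sketch only, in plain Python rather than TensorFlow's implementation. It models one such step for a one-parameter linear model y = w * x with mean squared error. The helper names `replica_gradient` and `mirrored_step` are hypothetical. With equal-sized shards, averaging the per-replica gradients gives the same update as a single device processing the whole batch.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # one (x, y) training pair


def replica_gradient(w: float, shard: List[Sample]) -> float:
    """Gradient of the mean squared error of y_hat = w * x over one shard."""
    # d/dw mean((w*x - y)^2) = mean(2 * (w*x - y) * x)
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def mirrored_step(w: float, batch: List[Sample], num_replicas: int, lr: float) -> float:
    """One synchronous data-parallel SGD step (conceptual model, not TF code)."""
    if len(batch) % num_replicas != 0:
        raise ValueError("global batch must divide evenly across replicas")
    per_replica = len(batch) // num_replicas
    # 1. Split the global batch into equal shards, one per replica (GPU).
    shards = [batch[i * per_replica:(i + 1) * per_replica] for i in range(num_replicas)]
    # 2. Each replica computes a gradient on its own shard using its copy of w.
    grads = [replica_gradient(w, shard) for shard in shards]
    # 3. All-reduce: average the gradients so every replica holds the same value.
    avg_grad = sum(grads) / num_replicas
    # 4. Every replica applies the identical update, so the copies stay in sync.
    return w - lr * avg_grad


if __name__ == "__main__":
    data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]  # samples of y = 2x
    print(mirrored_step(0.0, data, num_replicas=2, lr=0.01),
          mirrored_step(0.0, data, num_replicas=1, lr=0.01))
```

Both calls print the same weight. Splitting the batch across two replicas does not change the math of the step; it only spreads the work.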

2. Prerequisites

a. Remote (Kubernetes cluster)

- Create a Kubeflow account and set its resource quota (GPU, CPU, memory, disk)

- Disable Istio sidecar injection (istio-injection=disabled) for the user namespace

```
$ kubectl label namespace yoosung-jeon istio-injection=disabled --overwrite
```

b. Remote (Kubeflow Jupyter)

- Create a Kubeflow Jupyter notebook

i. Log in to the Kubeflow dashboard (http://kf.acp.kt.co.kr)

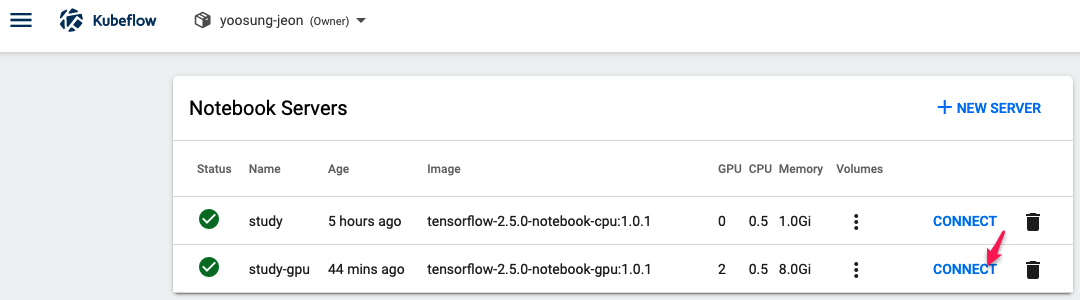

ii. Create a notebook server

Menu (top left) > Notebook Servers > ‘New Server’

Name: Study-gpu

Image: repo.acp.kt.co.kr/kubeflow/kubeflow-images-private/tensorflow-2.5.0-notebook-gpu:1.0.1

CPU: 0.5

Memory: 8Gi

Num of GPUs: 2

GPU Vendor: NVIDIA

iii. Connect to Jupyter
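The notebook server settings in step ii end up as resource requests on the notebook's Kubernetes pod. The sketch below shows roughly what the container `resources` block could look like for the Study-gpu settings, under two assumptions:

- the CPU and memory values become requests;
- the GPU count becomes a limit on `nvidia.com/gpu`, the extended resource advertised by the NVIDIA device plugin (GPU Vendor: NVIDIA).

The helper `notebook_resources` is hypothetical, and the exact manifest Kubeflow 1.2 generates may differ.

```python
from typing import Dict


def notebook_resources(cpu: str, memory: str, num_gpus: int) -> Dict[str, Dict[str, str]]:
    """Hypothetical helper: build a container `resources` block for the notebook pod.

    Assumption: CPU/memory become requests and the GPU count becomes a limit on
    the NVIDIA device plugin resource `nvidia.com/gpu`.
    """
    if num_gpus < 0:
        raise ValueError("num_gpus must be >= 0")
    resources: Dict[str, Dict[str, str]] = {"requests": {"cpu": cpu, "memory": memory}}
    if num_gpus > 0:
        # GPUs are an extended resource requested in whole units; when only a
        # limit is set, Kubernetes uses the same value as the request.
        resources["limits"] = {"nvidia.com/gpu": str(num_gpus)}
    return resources


if __name__ == "__main__":
    # Values from the 'Study-gpu' notebook server form
    print(notebook_resources(cpu="0.5", memory="8Gi", num_gpus=2))
```

GPUs are requested in whole units and are not overcommitted. As a result, the scheduler can only place this pod on a node that has at least two unallocated GPUs.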

- Notes

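The maximum number of GPUs you can allocate is the largest number of unused GPUs on any single worker node (server) in the Kubernetes cluster, since all of a pod's GPUs must come from the node it is scheduled on.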

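From the moment the Jupyter notebook is created, its GPUs are occupied and cannot be used by other applications. For example, the notebook pod study-gpu-0 runs on node iap10. Running nvidia-smi in that node's NVIDIA driver pod shows both GPUs held by one python3 process (PID 291829), with 31895MiB of 32510MiB of memory in use on each: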

```
$ kubectl get pod -n yoosung-jeon -o wide | egrep 'NAME|study-gpu'
NAME          READY   STATUS    RESTARTS   AGE   IP            NODE    NOMINATED NODE   READINESS GATES
study-gpu-0   1/1     Running   0          52m   10.244.4.74   iap10   <none>           <none>
$ kubectl exec -it -n gpu-operator-resources \
    $(kubectl get pod -n gpu-operator-resources -o wide | grep nvidia-driver-daemonset | grep iap10 | awk '{print $1}') \
    -- nvidia-smi
Fri Jun 25 06:56:17 2021
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 450.80.02    Driver Version: 450.80.02    CUDA Version: 11.0     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  Tesla V100-PCIE...  On   | 00000000:3B:00.0 Off |                    0 |
| N/A   29C    P0    38W / 250W |  31895MiB / 32510MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   1  Tesla V100-PCIE...  On   | 00000000:D8:00.0 Off |                    0 |
| N/A   28C    P0    35W / 250W |  31895MiB / 32510MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0   N/A  N/A    291829      C   /usr/bin/python3                31891MiB |
|    1   N/A  N/A    291829      C   /usr/bin/python3                31891MiB |
+-----------------------------------------------------------------------------+
```
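The first note above can be expressed as a small calculation: the GPU ceiling is the largest free-GPU count on a single node, not the cluster-wide total. In the sketch below, the node inventory is hypothetical apart from the name iap10. The namespace resource quota set during the prerequisites, which can lower the limit further, is ignored.

```python
from typing import Dict, Tuple

# Hypothetical inventory: node name -> (total GPUs, GPUs already allocated)
NodeGpus = Dict[str, Tuple[int, int]]


def max_allocatable_gpus(nodes: NodeGpus) -> int:
    """Largest GPU count a single notebook pod can request.

    A pod is scheduled onto exactly one node, so all of its GPUs must come from
    that node: the answer is the maximum per-node free count, not the sum.
    """
    free_per_node = [total - used for total, used in nodes.values()]
    return max(free_per_node, default=0)


if __name__ == "__main__":
    cluster = {"iap10": (2, 2), "node-a": (4, 1), "node-b": (2, 0)}  # free: 0, 3, 2
    print(max_allocatable_gpus(cluster))
```

With this example inventory, 5 GPUs are free across the cluster, but a single notebook can request at most 3.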

3. Writing the distributed training code

- Source

3.jupyter-gpu/keras-multiWorkerMirroredStrategy.ipynb

- Code snippet

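```python
import tensorflow as tf

# MirroredStrategy replicates the model onto every GPU visible to this process
# (the 2 GPUs allocated to this notebook) and keeps the replicas in sync.
strategy = tf.distribute.MirroredStrategy()

def build_and_compile_cnn_model():
    # Small CNN for 28x28 single-channel images (MNIST-style input)
    model = tf.keras.Sequential([
        tf.keras.layers.Conv2D(32, 3, activation='relu', input_shape=(28, 28, 1)),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(64, activation='relu'),
        tf.keras.layers.Dense(10, activation='softmax')
    ])
    model.compile(
        loss=tf.keras.losses.sparse_categorical_crossentropy,
        optimizer=tf.keras.optimizers.SGD(learning_rate=0.001),
        metrics=['accuracy'])
    return model

# Build and compile the model inside strategy.scope() so that its variables
# (and the optimizer's) are mirrored on each replica.
with strategy.scope():
    multi_gpu_model = build_and_compile_cnn_model()
```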
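With MirroredStrategy, the batch size given to the input pipeline is the global batch, which is divided among the replicas. A common pattern in the TensorFlow distributed-training tutorials is to choose a per-replica batch size and multiply it by `strategy.num_replicas_in_sync`.

The plain-Python sketch below does that arithmetic for the 2-GPU notebook. The per-replica batch size of 64 and the 60,000-image MNIST training set are assumptions for illustration. In the notebook, the replica count would come from `strategy.num_replicas_in_sync`.

```python
import math


def global_batch_size(per_replica_batch: int, num_replicas: int) -> int:
    """Examples consumed per step when each replica (GPU) gets per_replica_batch."""
    return per_replica_batch * num_replicas


def steps_per_epoch(num_examples: int, global_batch: int) -> int:
    """Steps needed to see every example once (the last batch may be partial)."""
    return math.ceil(num_examples / global_batch)


if __name__ == "__main__":
    num_replicas = 2        # in the notebook: strategy.num_replicas_in_sync
    per_replica_batch = 64  # assumed value, for illustration only
    batch = global_batch_size(per_replica_batch, num_replicas)
    print(batch, steps_per_epoch(60_000, batch))
```

At a fixed per-replica batch size, going from 1 to 2 GPUs doubles the global batch (64 to 128) and roughly halves the steps per epoch (938 to 469). That reduction is where data parallelism saves wall-clock time.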

4. Running distributed training

5. Source code
