2021.06.24

1. Distributed training (TensorFlow) from the Kubeflow Jupyter environment

- Environments

✓ Remote - Development environment

Kubeflow Jupyter

✓ Remote - Training environment

Kubeflow 1.2 (The machine learning toolkit for Kubernetes) / Kubernetes 1.16.15

Nexus (Private docker registry)

NVIDIA V100 / Driver 450.80, CUDA 11.2, cuDNN 8.1.0

CentOS 7.8

- Flow (TensorFlow)

✓ Remote (Kubeflow Jupyter)

a. Docker Image build

b. Docker Image Push

c. Kubeflow TFJob deployment

✓ Remote (Kubeflow / Kubernetes)

d. Create Kubernetes pods (chief pod, worker #n pods)

Docker image pull & container creation, GPU / CPU / memory resource allocation

e. Run the Kubernetes pods

TensorFlow distributed training

- Related technologies

✓ Kubeflow Fairing

✓ TensorFlow MultiWorkerMirroredStrategy (data parallelism)
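MultiWorkerMirroredStrategy discovers the cluster through the TF_CONFIG environment variable, which the TFJob operator is expected to inject into each chief and worker pod. The minimal sketch below, assuming the standard TF_CONFIG JSON layout (a "cluster" map plus a "task" entry), shows how a pod can work out its own role and the total number of data-parallel workers. The helper name describe_task and the sample host names are hypothetical.

```python
import json
import os


def describe_task(tf_config_json=None):
    """Return (task_type, task_index, num_workers) parsed from a TF_CONFIG JSON string.

    When no string is passed, the TF_CONFIG environment variable is read instead.
    """
    raw = tf_config_json if tf_config_json is not None else os.environ.get("TF_CONFIG", "{}")
    config = json.loads(raw)
    cluster = config.get("cluster", {})
    task = config.get("task", {})
    # MultiWorkerMirroredStrategy trains on the chief as well as on every worker,
    # so both replica types count towards the number of data-parallel workers.
    num_workers = len(cluster.get("chief", [])) + len(cluster.get("worker", []))
    return task.get("type"), task.get("index"), num_workers


if __name__ == "__main__":
    # Hypothetical TF_CONFIG for 1 chief + 2 workers (host names are made up).
    sample = json.dumps({
        "cluster": {
            "chief": ["training-chief-0:2222"],
            "worker": ["training-worker-0:2222", "training-worker-1:2222"],
        },
        "task": {"type": "worker", "index": 1},
    })
    print(describe_task(sample))  # ('worker', 1, 3)
```

With chief_count=1 and worker_count=2, every pod sees 3 workers, which matches the three pods listed in section 4.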

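2. Prerequisites

a. Remote (Kubernetes cluster)

- Create a Kubeflow account & set resource quotas (GPU, CPU, memory, disk)

- Create the private Docker registry credential

The private Docker registry must serve a TLS certificate (as of Kubeflow 1.2, self-signed certificates are not supported). In the commands below, k is an alias for kubectl.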

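$ k create secret docker-registry chelsea-agp-reg-cred -n yoosung-jeon \
    --docker-server=repo.chelsea.kt.co.kr --docker-username=agp --docker-password=*****

$ k patch serviceaccount default -n yoosung-jeon -p "{\"imagePullSecrets\": [{\"name\": \"chelsea-agp-reg-cred\"}]}"

Attaching the secret to the namespace's default service account lets the training pods pull images from the private registry without declaring imagePullSecrets themselves.

- Create the Kubernetes PV (Persistent Volume) through a PersistentVolumeClaim

This is the disk shared by the distributed training applications (chief and worker pods).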

$ vi mnist-mwms-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mnist-mwms-pvc
  namespace: yoosung-jeon
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs-sc-iap

$ k apply -f mnist-mwms-pvc.yaml

…

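Copy the local tensorflow_datasets directory into the NFS directory that backs the PVC, so every training pod can read the dataset:

$ cp -r tensorflow_datasets `k get pvc -n yoosung-jeon | grep -w mnist-mwms-pvc | awk '{printf("/nfs_01/yoosung-jeon-%s-%s", $1, $3)}'`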

$
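The awk expression above assumes that the NFS provisioner behind nfs-sc-iap creates each volume's directory as <namespace>-<pvc-name>-<pv-name> under /nfs_01. As a minimal sketch of that same logic (the helper name nfs_dir_for_pvc is hypothetical), the path can be derived from the kubectl get pvc output in Python. Unlike grep -w, it only matches the PVC name in the NAME column.

```python
def nfs_dir_for_pvc(kubectl_output, pvc_name, namespace="yoosung-jeon", nfs_root="/nfs_01"):
    """Mirror the grep/awk pipeline: locate the PVC row and build its NFS directory path."""
    for line in kubectl_output.splitlines():
        fields = line.split()
        # In `kubectl get pvc` output, column 1 is NAME and column 3 is VOLUME (the bound PV).
        if fields and fields[0] == pvc_name:
            return f"{nfs_root}/{namespace}-{fields[0]}-{fields[2]}"
    raise LookupError(f"PVC {pvc_name!r} not found")


if __name__ == "__main__":
    # Hypothetical output; the real VOLUME column holds a generated PV name.
    sample = (
        "NAME             STATUS   VOLUME     CAPACITY   ACCESS MODES   STORAGECLASS   AGE\n"
        "mnist-mwms-pvc   Bound    pvc-1234   1Gi        RWX            nfs-sc-iap     5m\n"
    )
    print(nfs_dir_for_pvc(sample, "mnist-mwms-pvc"))  # /nfs_01/yoosung-jeon-mnist-mwms-pvc-pvc-1234
```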

b. Remote (Kubeflow Jupyter)

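- Create a Kubeflow Jupyter notebook

i. Log in to the Kubeflow dashboard (http://kf.acp.kt.co.kr)

ii. Create a notebook server

Menu (top left) > Notebook Servers > 'New Server'

Name: Study

Image: repo.acp.kt.co.kr/kubeflow/kubeflow-images-private/tensorflow-2.5.0-notebook-cpu:1.0.1

iii. Connect to Jupyter

iv. Open a terminal

v. Create the private Docker registry credential

Fairing pushes the training image to the private registry from the notebook, so the notebook's ~/.docker/config.json needs an entry for the registry. The auth value is the base64 encoding of <username>:<password>.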

$ mkdir .docker && vi .docker/config.json

{
  "auths": {
    "repo.chelsea.kt.co.kr": {"auth": "YWdwOm5ldzEy****"}
  }
}

$
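Rather than encoding the auth value by hand, the config.json body can be generated. This is a minimal sketch using only the standard library; the function name docker_config_json is hypothetical and the password in the usage line is a placeholder.

```python
import base64
import json


def docker_config_json(registry, username, password):
    """Return a Docker client config.json body holding basic-auth credentials for one registry."""
    # The "auth" value is base64("<username>:<password>").
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return json.dumps({"auths": {registry: {"auth": token}}}, indent=2)


if __name__ == "__main__":
    # Placeholder password, not a real credential.
    print(docker_config_json("repo.chelsea.kt.co.kr", "agp", "example-password"))
```

The output can be written to ~/.docker/config.json in the notebook terminal. The docker-registry secret created with kubectl in step 2.a stores its credentials under the same "auths" structure.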

3. Distributed training code

a. Create the base image

Add the Python packages used by the distributed training application to the base image. If there are no packages to add, skip this step.

yoosungjeon@ysjeon-Dev create-base-docker-image % vi Dockerfile

FROM tensorflow/tensorflow:2.5.0-gpu

COPY requirements.txt .

RUN pip install -r requirements.txt

yoosungjeon@ysjeon-Dev create-base-docker-image % vi requirements.txt

tensorflow-datasets==4.3.0

yoosungjeon@ysjeon-Dev create-base-docker-image % docker build -t repo.chelsea.kt.co.kr/agp/tensorflow-custom:2.5.0-gpu -f Dockerfile .

…

yoosungjeon@ysjeon-Dev create-base-docker-image % docker push repo.chelsea.kt.co.kr/agp/tensorflow-custom:2.5.0-gpu

yoosungjeon@ysjeon-Dev create-base-docker-image %

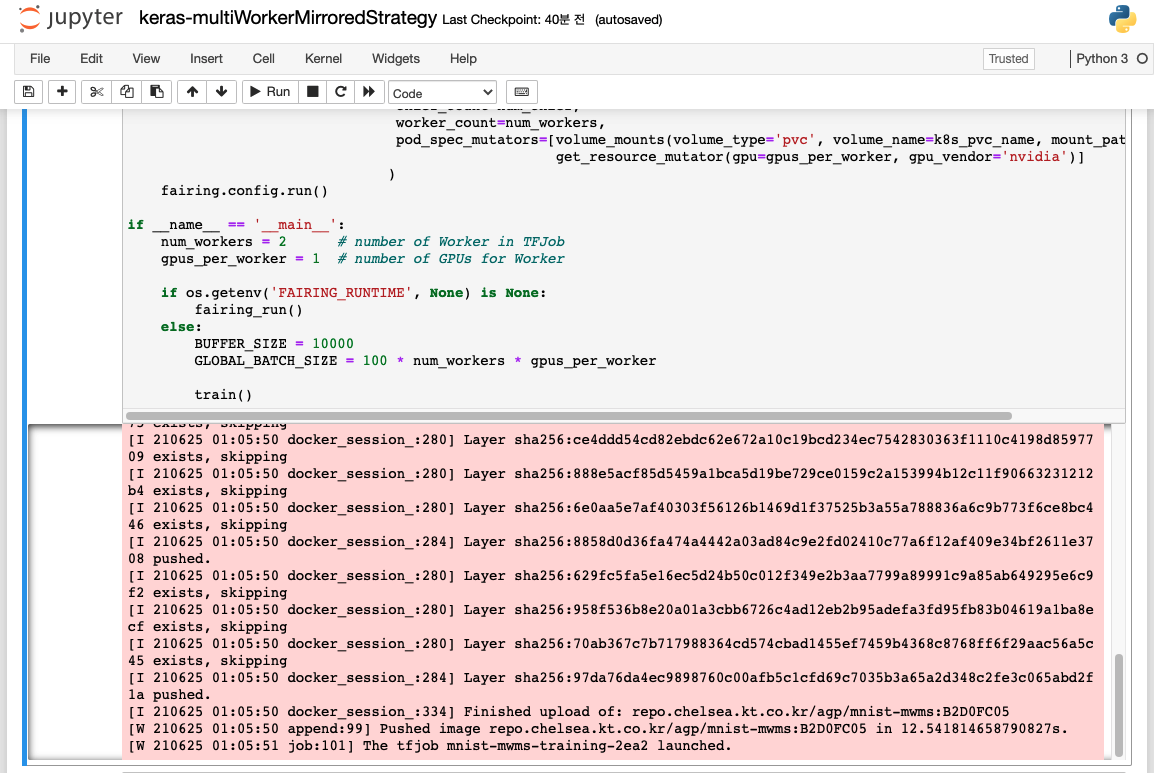

b. Fairing & distributed training code

- Source

2.jupyter-cpu-fairing/keras-multiWorkerMirroredStrategy.ipynb

- Code snippet

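import os

import tensorflow as tf
from kubeflow import fairing
from kubeflow.fairing.kubernetes.utils import get_resource_mutator, volume_mounts


def main():
    strategy = tf.distribute.experimental.MultiWorkerMirroredStrategy()
    ...
    # Variables created inside the strategy scope are mirrored across all workers.
    with strategy.scope():
        multi_worker_model = build_and_compile_cnn_model()
    ...


def fairing_run():
    base_image = 'repo.chelsea.kt.co.kr/agp/tensorflow-custom:2.5.0-gpu'
    ...
    # 'append' builder: adds the notebook code as a layer on top of base_image and pushes it to docker_registry.
    fairing.config.set_builder(name='append', registry=docker_registry,
                               base_image=base_image, image_name=image_name)
    # 'tfjob' deployer: submits a TFJob with num_chief chief pods and num_workers worker pods,
    # mounting the shared PVC and requesting GPUs for each pod.
    fairing.config.set_deployer(name='tfjob', namespace=k8s_namespace, stream_log=False, job_name=tfjob_name,
                                chief_count=num_chief, worker_count=num_workers,
                                pod_spec_mutators=[
                                    volume_mounts(volume_type='pvc', volume_name=k8s_pvc_name, mount_path=mount_dir),
                                    get_resource_mutator(gpu=gpus_per_worker, gpu_vendor='nvidia')]
                                )
    fairing.config.run()


if __name__ == '__main__':
    ...
    # FAIRING_RUNTIME is unset in the notebook (submit the job) and set inside the
    # pods deployed by fairing (run the training).
    if os.getenv('FAIRING_RUNTIME', None) is None:
        fairing_run()
    else:
        main()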
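With data parallelism under MultiWorkerMirroredStrategy, each training step consumes one global batch that is split across all replicas, so the input pipeline is usually given the per-replica batch size multiplied by the number of replicas. The arithmetic sketch below assumes one replica per GPU (a CPU-only worker counts as one replica), the 3 pods shown in section 4 (1 chief + 2 workers), a hypothetical per-replica batch size of 64, and the 60,000-image MNIST training split. The function names are hypothetical and not part of the original notebook.

```python
import math


def global_batch_size(per_replica_batch, num_workers, gpus_per_worker=1):
    # One replica per GPU on each worker; a CPU-only worker (0 GPUs) still counts as one replica.
    return per_replica_batch * num_workers * max(gpus_per_worker, 1)


def steps_per_epoch(num_examples, global_batch):
    # Round up so a final partial batch still counts as a step.
    return math.ceil(num_examples / global_batch)


if __name__ == "__main__":
    # Assumed values: 3 pods (1 chief + 2 workers), 1 GPU each, 64 examples per replica.
    gbs = global_batch_size(64, 3)
    print(gbs, steps_per_epoch(60000, gbs))  # 192 313
```

Adding workers shortens each epoch in steps while every replica keeps the same per-step workload.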

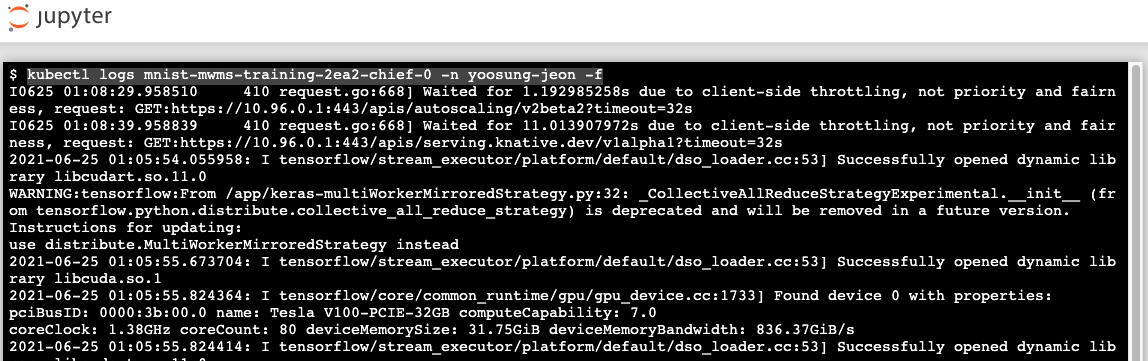
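The tfjob deployer turns chief_count and worker_count into the Chief and Worker replica specs of a TFJob custom resource. The sketch below builds an approximate manifest of that shape as a Python dict, assuming the kubeflow.org/v1 TFJob API. It is not the exact object fairing generates (fairing adds its own labels, annotations and container command), and the function name, the volume name "training-data", the image placeholder and the mount path are hypothetical.

```python
import json


def tfjob_manifest(name, namespace, image, num_chief, num_workers,
                   gpus_per_worker, pvc_name, mount_dir):
    """Build an approximate kubeflow.org/v1 TFJob with Chief and Worker replica specs."""

    def replica_spec(replicas):
        return {
            "replicas": replicas,
            "template": {
                "spec": {
                    "containers": [{
                        # The TFJob operator looks for a container named "tensorflow".
                        "name": "tensorflow",
                        "image": image,
                        "resources": {"limits": {"nvidia.com/gpu": gpus_per_worker}},
                        "volumeMounts": [{"name": "training-data", "mountPath": mount_dir}],
                    }],
                    "volumes": [{
                        "name": "training-data",
                        "persistentVolumeClaim": {"claimName": pvc_name},
                    }],
                }
            },
        }

    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "TFJob",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "tfReplicaSpecs": {
                "Chief": replica_spec(num_chief),
                "Worker": replica_spec(num_workers),
            }
        },
    }


if __name__ == "__main__":
    # Image and mount path are placeholders; fairing fills in the real values.
    manifest = tfjob_manifest("mnist-mwms-training", "yoosung-jeon", "<image pushed by fairing>",
                              num_chief=1, num_workers=2, gpus_per_worker=1,
                              pvc_name="mnist-mwms-pvc", mount_dir="/mnt/data")
    print(json.dumps(manifest, indent=2))
```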

4. Running distributed training

a. Remote (Kubeflow Jupyter)

b. Remote (Kubernetes cluster)

$ kubectl get pod -n yoosung-jeon -o wide | egrep 'NAME|mnist-mwms-training-4edc'

NAME                                READY   STATUS    RESTARTS   AGE   IP            NODE
mnist-mwms-training-4edc-chief-0    1/1     Running   0          6s    10.244.3.3    iap11
mnist-mwms-training-4edc-worker-0   1/1     Running   0          6s    10.244.4.41   iap10
mnist-mwms-training-4edc-worker-1   1/1     Running   0          6s    10.244.4.43   iap10

$
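The TFJob's pods follow the <job-name>-<replica-type>-<index> naming shown above. As a small convenience sketch that is not part of the original workflow, the same check can be scripted by parsing the kubectl get pod text output and confirming that every pod of the job is Running. The helper name is hypothetical, and the parsing assumes the column order shown above.

```python
def job_pod_statuses(kubectl_output, job_name):
    """Return ({pod_name: status}, all_running) for the pods of one TFJob."""
    statuses = {}
    for line in kubectl_output.splitlines():
        fields = line.split()
        # Columns: NAME READY STATUS RESTARTS AGE IP NODE ...
        if len(fields) >= 3 and fields[0].startswith(job_name + "-"):
            statuses[fields[0]] = fields[2]
    all_running = bool(statuses) and all(s == "Running" for s in statuses.values())
    return statuses, all_running


if __name__ == "__main__":
    # Output copied from the kubectl command above.
    sample = """NAME READY STATUS RESTARTS AGE IP NODE
mnist-mwms-training-4edc-chief-0 1/1 Running 0 6s 10.244.3.3 iap11
mnist-mwms-training-4edc-worker-0 1/1 Running 0 6s 10.244.4.41 iap10
mnist-mwms-training-4edc-worker-1 1/1 Running 0 6s 10.244.4.43 iap10"""
    statuses, ok = job_pod_statuses(sample, "mnist-mwms-training-4edc")
    print(len(statuses), ok)  # 3 True
```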

5. Source
