2020.09.01

1. Overview

- Configure Prometheus, Grafana, and Jaeger to monitor Strimzi, and update the Strimzi configuration accordingly

- Environments

Prometheus Operator 0.38

Prometheus 2.16

Grafana 6.3.0

Strimzi 0.19

Kafka 2.5.0

2. Creating kafka cluster with metrics

a. Deploying a Kafka cluster with Prometheus metrics configuration

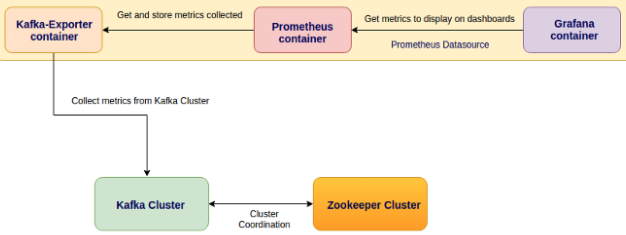

- Strimzi uses the Prometheus JMX Exporter to expose JMX metrics from Kafka and ZooKeeper using an HTTP endpoint, which is then scraped by the Prometheus server.

- Apply the example metrics configuration, which contains the JMX Exporter relabeling rules, to your Kafka cluster

- https://strimzi.io/docs/operators/latest/deploying.html#con-metrics-kafka-options-str

$ wget https://github.com/strimzi/strimzi-kafka-operator/releases/download/0.19.0/strimzi-0.19.0.tar.gz

$ tar xzf strimzi-0.19.0.tar.gz

$ cd strimzi-0.19.0/examples/metrics

$ vi kafka-metrics.yaml

…

    kind: Kafka
    metadata:
      name: emo-dev-cluster
    spec:
      kafka:
        …
        listeners:
          plain: {}
          tls: {}
          external:         # appended
            type: nodeport  # appended
            tls: false      # appended
        …
        storage:
          size: 10Gi        # default value: 100Gi
        …
      zookeeper:
        …
        storage:
          size: 10Gi        # default value: 100Gi
        …

$ kubectl delete kafka emo-dev-cluster -n kafka-cluster

$ kubectl apply -f kafka-metrics.yaml -n kafka-cluster

$ kubectl get pod -n kafka-cluster

NAME READY STATUS RESTARTS AGE

emo-dev-cluster-entity-operator-5d7fd85ccd-2qhlq 3/3 Running 0 92s

emo-dev-cluster-kafka-0 2/2 Running 0 2m15s

emo-dev-cluster-kafka-1 2/2 Running 0 2m15s

emo-dev-cluster-kafka-2 2/2 Running 0 2m15s

emo-dev-cluster-kafka-exporter-c684f6d94-2t78m 1/1 Running 0 70s

emo-dev-cluster-zookeeper-0 1/1 Running 0 2m59s

emo-dev-cluster-zookeeper-1 1/1 Running 0 2m59s

emo-dev-cluster-zookeeper-2 1/1 Running 0 2m59s

$
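Before moving on, it can help to confirm that the cluster is ready and that the JMX Exporter endpoint actually serves metrics. The sketch below wraps `kubectl wait` and adds a small filter for Prometheus text-format output. The function names are illustrative and not part of Strimzi, and port 9404 is assumed to be the metrics port of the broker pods.

```shell
# Block until the Kafka custom resource reports the Ready condition.
# $1 = cluster name, $2 = namespace, $3 = timeout (optional, default 300s)
wait_for_kafka() {
  kubectl wait "kafka/$1" --for=condition=Ready --timeout="${3:-300s}" -n "$2"
}

# Keep only the sample lines of one metric family from Prometheus
# text-format input on stdin (drops "# HELP" and "# TYPE" comment lines).
# $1 = metric name prefix, for example kafka_server_
metric_samples() {
  grep -v '^#' | grep "^$1"
}

# Usage (assumption: the brokers expose metrics on port 9404):
#   wait_for_kafka emo-dev-cluster kafka-cluster
#   kubectl port-forward pod/emo-dev-cluster-kafka-0 9404:9404 -n kafka-cluster &
#   curl -s http://localhost:9404/metrics | metric_samples kafka_server_
```

If the filter prints nothing, the relabeling rules are probably not loaded, and it is worth checking the metrics section of the Kafka resource.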

3. Deploying Prometheus

a. Deploying the CoreOS Prometheus Operator 0.38

- The Prometheus Operator makes the Prometheus configuration Kubernetes native and manages and operates Prometheus and Alertmanager clusters.

- https://github.com/prometheus-operator/prometheus-operator

$ kubectl create ns monitoring

$ cd strimzi-0.19.0/examples/metrics/

$ curl -s https://raw.githubusercontent.com/coreos/prometheus-operator/release-0.38/bundle.yaml | sed -e 's/namespace: .*/namespace: monitoring/' > prometheus-operator-deployment.yaml

$ kubectl apply -f prometheus-operator-deployment.yaml

- Replace the example namespace with your own.

The command above uses the release-0.38 branch; choose a release that is compatible with your version of Kubernetes

Version >=0.18.0 of the Prometheus Operator requires a Kubernetes cluster of version >=1.8.0

https://github.com/prometheus-operator/prometheus-operator/tree/release-0.38

Version >=0.39.0 of the Prometheus Operator requires a Kubernetes cluster of version >=1.16.0

https://github.com/prometheus-operator/prometheus-operator/tree/release-0.42

The deployment failed with an error saying that the Prometheus Operator 0.38.2 image does not exist, so the image tag was changed to 0.38.1:

$ sed -i 's/0.38.2/0.38.1/' prometheus-operator-deployment.yaml # for linux

$ sed -i '' 's/0.38.2/0.38.1/' prometheus-operator-deployment.yaml # for MacOS
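The effect of the two sed substitutions can be checked on a small sample before editing the real bundle. The following is a minimal sketch with illustrative function names; it reads from stdin and writes to stdout instead of editing in place, so it behaves the same on Linux and macOS.

```shell
# Rewrite every "namespace:" value read on stdin to the namespace in $1
# (the same substitution as the sed command used on bundle.yaml).
set_namespace() {
  sed -e "s/namespace: .*/namespace: $1/"
}

# Replace image tag 0.38.2 with 0.38.1. The dots are escaped so that they
# match literal dots only; an unescaped "." matches any character.
pin_operator_tag() {
  sed -e 's/0\.38\.2/0.38.1/'
}

# Usage:
#   set_namespace monitoring < bundle.yaml | pin_operator_tag > prometheus-operator-deployment.yaml
```

Note that the namespace substitution rewrites every `namespace:` key, including the ones inside role bindings, which is what the bundle needs here.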

b. Deploying Prometheus 2.16

- Prometheus (https://prometheus.io/)

✓ An open-source monitoring system with a dimensional data model, flexible query language, efficient time series database and modern alerting approach.

✓ Prometheus's main features are:

a multi-dimensional data model with time series data identified by metric name and key/value pairs

PromQL, a flexible query language to leverage this dimensionality

no reliance on distributed storage; single server nodes are autonomous

time series collection happens via a pull model over HTTP

pushing time series is supported via an intermediary gateway

targets are discovered via service discovery or static configuration

multiple modes of graphing and dashboarding support

✓ Prometheus Architecture

- https://strimzi.io/docs/operators/latest/deploying.html#proc-metrics-deploying-prometheus-str

$ cd strimzi-0.19.0/examples/metrics/prometheus-install/

$ sed -i 's/namespace: .*/namespace: monitoring/' prometheus.yaml # for linux

$ sed -i '' 's/namespace: .*/namespace: monitoring/' prometheus.yaml # for MacOS

- PodMonitor is used to scrape data directly from pods and is used for Apache Kafka, ZooKeeper, Operators, and Kafka Bridge.

Update the namespaceSelector.matchNames property with the namespace where the pods to scrape the metrics from are running.

$ vi strimzi-pod-monitor.yaml

    apiVersion: monitoring.coreos.com/v1
    kind: PodMonitor
    …
    metadata:
      name: cluster-operator-metrics
      …
    spec:
      namespaceSelector:
        matchNames:
          - kafka-operator
    …
    metadata:
      name: entity-operator-metrics
      …
    spec:
      namespaceSelector:
        matchNames:
          - kafka-cluster
    …
    metadata:
      name: bridge-metrics
      …
    spec:
      namespaceSelector:
        matchNames:
          - kafka-cluster
    …
    metadata:
      name: kafka-metrics
    spec:
      namespaceSelector:
        matchNames:
          - kafka-cluster
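After editing, a quick way to double-check which namespace each PodMonitor will scrape is to list the names next to their matchNames entries. This is a rough awk sketch, not a YAML parser: it assumes the layout of the Strimzi example file, where `name:` appears under metadata and the namespaces follow `matchNames:` as list items.

```shell
# Print "<PodMonitor name>: <namespace>" for every matchNames entry.
# Reads the files given as arguments, or stdin when none are given.
list_monitor_namespaces() {
  awk '
    /^ *name:/          { name = $2 }
    /^ *matchNames:/    { in_list = 1; next }
    in_list && /^ *- /  { print name ": " $2; next }
                        { in_list = 0 }
  ' "$@"
}

# Usage:
#   list_monitor_namespaces strimzi-pod-monitor.yaml
```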

$ kubectl create secret generic additional-scrape-configs --from-file=../prometheus-additional-properties/prometheus-additional.yaml -n monitoring

$ kubectl describe secrets additional-scrape-configs -n monitoring

Name: additional-scrape-configs

Namespace: monitoring

Labels: <none>

Annotations: <none>

Type: Opaque

Data

====

prometheus-additional.yaml: 2822 bytes

$

- Deploy the Prometheus resources

$ kubectl apply -f strimzi-pod-monitor.yaml -n monitoring

podmonitor.monitoring.coreos.com/cluster-operator-metrics created

podmonitor.monitoring.coreos.com/entity-operator-metrics created

podmonitor.monitoring.coreos.com/bridge-metrics created

podmonitor.monitoring.coreos.com/kafka-metrics created

$ kubectl apply -f prometheus-rules.yaml -n monitoring

prometheusrule.monitoring.coreos.com/prometheus-k8s-rules created

$ kubectl apply -f prometheus.yaml -n monitoring

serviceaccount/prometheus-server created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-server created

prometheus.monitoring.coreos.com/prometheus created

$ kubectl get pod prometheus-prometheus-0 -n monitoring

NAME READY STATUS RESTARTS AGE

prometheus-prometheus-0 3/3 Running 1 68s

$ kubectl logs prometheus-operator-5844bc67c6-9hfrm -n monitoring -f

level=info ts=2020-08-28T04:07:29.213527439Z caller=operator.go:1094 component=prometheusoperator msg="sync prometheus" key=monitoring/prometheus

…

$ kubectl logs prometheus-prometheus-0 prometheus -n monitoring

level=info ts=2020-08-28T04:07:51.431Z caller=main.go:331 msg="Starting Prometheus" version="(version=2.16.0, branch=HEAD, revision=b90be6f32a33c03163d700e1452b54454ddce0ec)"

$ kubectl get svc prometheus-operated -n monitoring

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

prometheus-operated ClusterIP None <none> 9090/TCP 3d2h

$ kubectl port-forward svc/prometheus-operated 9090:9090 -n monitoring --address 0.0.0.0 &
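With the port-forward in place, the scrape status can also be checked from the command line through the Prometheus HTTP API. The helper below is a small sketch: `prom_query` and the `PROM_URL` variable are illustrative names, and the default URL assumes the local port-forward on 9090.

```shell
# Run an instant PromQL query against Prometheus and print the JSON response.
# $1 = PromQL expression; PROM_URL overrides the default local address.
prom_query() {
  curl -s -G --data-urlencode "query=$1" "${PROM_URL:-http://localhost:9090}/api/v1/query"
}

# Usage:
#   prom_query 'up'   # value 1 = target scraped successfully, 0 = scrape failing
```

`--data-urlencode` together with `-G` lets curl handle the URL encoding of braces and quotes in label selectors.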

c. Deploying Prometheus Alertmanager

- Prometheus Alertmanager is a plugin for handling alerts and routing them to a notification service.

Alerting rules provide notifications about specific conditions observed in the metrics. Rules are declared on the Prometheus server, but Prometheus Alertmanager is responsible for alert notifications.

Receiver: email, webhook, slack, PagerDuty, Pushover, OpsGenie, VictorOps, WeChat

- https://prometheus.io/docs/alerting/latest/configuration/#receiver

- https://strimzi.io/docs/operators/latest/deploying.html#assembly-metrics-prometheus-alertmanager-str

$ kubectl create secret generic alertmanager-alertmanager --from-file=alertmanager.yaml=../prometheus-alertmanager-config/alert-manager-config.yaml -n monitoring

$ kubectl apply -f alert-manager.yaml -n monitoring

- The alert-manager-config.yaml configuration provided with Strimzi configures the Alertmanager to send notifications to a Slack channel. Before using it, update the following properties:

Set the slack_api_url property to the actual Slack API URL of the application for the Slack workspace

Set the channel property to the actual Slack channel on which to send notifications

$ kubectl logs alertmanager-alertmanager-0 alertmanager -n my-kafka-project -f

level=error ts=2020-08-28T07:06:36.213Z caller=notify.go:372 component=dispatcher msg="Error on notify" err="cancelling notify retry for \"slack\" due to unrecoverable error: unexpected status code 404: no_team" context_err=null

level=error ts=2020-08-28T07:06:36.213Z caller=dispatch.go:301 component=dispatcher msg="Notify for alerts failed" num_alerts=1 err="cancelling notify retry for \"slack\" due to unrecoverable error: unexpected status code 404: no_team"

level=warn ts=2020-08-28T07:06:40.647Z caller=cluster.go:438 component=cluster msg=refresh result=failure addr=alertmanager-alertmanager-0.alertmanager-operated.my-kafka-project.svc:9094
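The `no_team` response in the log comes from Slack, and it is consistent with a slack_api_url that does not point to a real workspace (this is an inference from the log, not something the setup above confirms). One way to test a webhook URL independently of Alertmanager is to post a message to it directly. The function name below is illustrative.

```shell
# Post a test message to a Slack incoming-webhook URL and print the response body.
# $1 = webhook URL (the value intended for slack_api_url)
slack_webhook_test() {
  curl -s -X POST -H 'Content-type: application/json' \
       --data '{"text":"Alertmanager webhook test"}' "$1"
}

# Usage:
#   slack_webhook_test "<your Slack webhook URL>"
```

If this direct call fails as well, the problem is the URL itself rather than the Alertmanager configuration.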

4. Deploying Grafana

- To provide visualizations of Prometheus metrics, you can use your own Grafana installation or deploy Grafana by applying the grafana.yaml file provided in the examples/metrics directory.

- https://strimzi.io/docs/operators/latest/deploying.html#assembly-metrics-grafana-str

$ cd ~/strimzi-0.19.0/examples/metrics/grafana-install/

$ kubectl apply -f grafana.yaml -n monitoring

deployment.apps/grafana created

service/grafana created

$ kubectl patch service grafana -n monitoring -p '{ "spec": { "type": "NodePort" } }'

$ kubectl get svc grafana -n monitoring

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

grafana NodePort 10.99.22.177 <none> 3000:30807/TCP 3m50s

$

- Point a web browser to http://14.52.244.134:30807/login

The default Grafana user name and password are both admin.

Add Prometheus as a data source.

data source > Prometheus

Name: Prometheus-DS

Prometheus server URL: http://prometheus-operated:9090

Save & Test
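The same data source can be created without the UI through Grafana's HTTP API. This is a sketch under a few assumptions: the default admin/admin credentials are still in place, and the function name is illustrative.

```shell
# Create the Prometheus data source through the Grafana HTTP API.
# $1 = Grafana base URL, for example http://<node-ip>:<node-port>
add_prometheus_datasource() {
  curl -s -u admin:admin -X POST -H 'Content-Type: application/json' \
       --data '{"name":"Prometheus-DS","type":"prometheus","access":"proxy","url":"http://prometheus-operated:9090"}' \
       "$1/api/datasources"
}

# Usage:
#   add_prometheus_datasource http://14.52.244.134:30807
```

The `proxy` access mode makes the Grafana server call Prometheus itself, which is why the in-cluster service name prometheus-operated works as the URL.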

- Import dashboard from file

+ > Create > Import > Upload .json file

File: strimzi-0.19.0/examples/metrics/grafana-dashboards/*.json

Required: strimzi-kafka.json, strimzi-zookeeper.json, strimzi-operators.json, strimzi-kafka-exporter.json

Optional: strimzi-kafka-bridge.json, strimzi-kafka-connect.json, strimzi-kafka-mirror-maker-2.json

Select a Prometheus data source: Prometheus-DS

- Kafka Grafana Dashboard

5. Deploying Kafka Exporter

- Kafka Exporter is an open source project to enhance monitoring of Apache Kafka brokers and clients.

- Kafka Exporter connects to Kafka as a client and collects different information about topics, partitions and consumer groups. It then exposes this information as a Prometheus metric endpoint.

- Kafka Exporter is provided with Strimzi for deployment with a Kafka cluster to extract additional metrics data from Kafka brokers related to offsets, consumer groups, consumer lag, and topics.

- Consumer lag indicates the difference in the rate of production and consumption of messages.

It is critical to monitor consumer lag to check that it does not become too big.

- https://strimzi.io/docs/operators/latest/deploying.html#proc-kafka-exporter-configuring-str

- Configure logging, enableSaramaLogging, livenessProbe, and readinessProbe (optional)

$ kubectl edit kafka emo-dev-cluster -n kafka-cluster

…

    kafkaExporter:
      topicRegex: .*
      groupRegex: .*
      logging: debug
      enableSaramaLogging: true
      livenessProbe:
        initialDelaySeconds: 15
        timeoutSeconds: 5
      readinessProbe:
        initialDelaySeconds: 15
        timeoutSeconds: 5

…

$ kubectl logs emo-dev-cluster-kafka-exporter-85f8c8647b-hcf8v -n kafka-cluster

time="2020-09-01T07:38:50Z" level=info msg="Starting kafka_exporter (version=1.2.0, branch=HEAD, revision=830660212e6c109e69dcb1cb58f5159fe3b38903)" source="kafka_exporter.go:474"

time="2020-09-01T07:38:50Z" level=info msg="Build context (go=go1.10.3, user=root@981cde178ac4, date=20180707-14:34:48)" source="kafka_exporter.go:475"

[sarama] 2020/09/01 07:38:50 Initializing new client

[sarama] 2020/09/01 07:38:50 client/metadata fetching metadata for all topics from broker emo-dev-cluster-kafka-bootstrap:9091

[sarama] 2020/09/01 07:38:50 Connected to broker at emo-dev-cluster-kafka-bootstrap:9091 (unregistered)

[sarama] 2020/09/01 07:38:50 client/brokers registered new broker #0 at emo-dev-cluster-kafka-0.emo-dev-cluster-kafka-brokers.kafka-cluster.svc:9091
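Once the exporter is connected, consumer lag can be queried from Prometheus directly. In offset terms, the lag of a partition is its latest offset minus the offset committed by the consumer group, and Kafka Exporter publishes this per partition as kafka_consumergroup_lag. The sketch below builds a PromQL expression that sums it per topic. The function names and the `PROM_URL` variable are illustrative, and the default URL assumes a local port-forward to Prometheus on 9090.

```shell
# Build a PromQL expression for the total lag of one consumer group per topic.
# $1 = consumer group name
lag_expr() {
  printf 'sum by (topic) (kafka_consumergroup_lag{consumergroup="%s"})' "$1"
}

# Send the expression to the Prometheus HTTP API and print the JSON response.
consumer_lag() {
  curl -s -G --data-urlencode "query=$(lag_expr "$1")" \
       "${PROM_URL:-http://localhost:9090}/api/v1/query"
}

# Usage:
#   consumer_lag my-group
```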

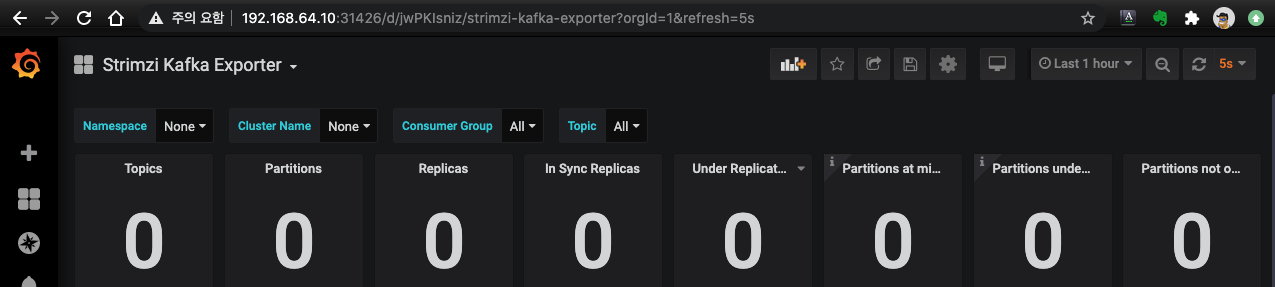

…

- Kafka Exporter Dashboard

- Troubleshooting

▷ Problem: No data is displayed, including the namespace.

▷ Cause:

- Menu: Strimzi Kafka Exporter > Dashboard settings > Variables

Variable: $kubernetes_namespace / Definition: query_result(kafka_consumergroup_current_offset)

- Check the collected metrics in the Explore menu (kafka_topic_partition_current_offset). Note that while nothing was displayed, there was no data.

▷ Solution:

Data appears after a client with a consumer group name connects for the first time.

$ bin/kafka-console-producer.sh --broker-list 14.52.244.208:30170,14.52.244.210:30170,14.52.244.211:30170 --topic my-topic
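The producer alone does not create a consumer group, so a consumer that joins a named group is needed as well. A minimal sketch using the console consumer shipped with Kafka follows; the function name, the `KAFKA_HOME` variable, and the default group name my-group are hypothetical and not from the original setup.

```shell
# Start a console consumer that joins a named consumer group, so that
# Kafka Exporter has consumer group offsets to report.
# $1 = bootstrap servers, $2 = topic, $3 = group name (optional)
consume_with_group() {
  "${KAFKA_HOME:-.}/bin/kafka-console-consumer.sh" \
    --bootstrap-server "$1" --topic "$2" --group "${3:-my-group}" --from-beginning
}

# Usage (run from the Kafka installation directory):
#   consume_with_group 14.52.244.208:30170,14.52.244.210:30170,14.52.244.211:30170 my-topic
```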

6. Distributed tracing

- In Strimzi, tracing facilitates the end-to-end tracking of messages: from source systems to Kafka, and then from Kafka to target systems and applications.

- Support for tracing is built in to the following components:

✓ Kafka Connect (including Kafka Connect with Source2Image support)

✓ MirrorMaker / MirrorMaker 2.0

✓ Strimzi Kafka Bridge

- Strimzi uses the OpenTracing and Jaeger projects.

✓ OpenTracing is an API specification that is independent from the tracing or monitoring system.

✓ Jaeger is a tracing system for microservices-based distributed systems.

- https://strimzi.io/docs/operators/master/using.html#assembly-distributed-tracing-str
