2020.12.23

1. GPU Monitor

- Prometheus

Prometheus is deployed together with kube-state-metrics and node_exporter, which expose cluster-level metrics for Kubernetes API objects and node-level metrics such as CPU utilization.

- DCGM-Exporter (https://github.com/NVIDIA/gpu-monitoring-tools)

DCGM-Exporter exposes GPU metrics to Prometheus by leveraging NVIDIA DCGM.

- kube-state-metrics

kube-state-metrics is a simple service that listens to the Kubernetes API server and generates metrics about the state of the objects.

- node-exporter

Prometheus exporter for hardware and OS metrics exposed by *NIX kernels, written in Go with pluggable metric collectors.

- Reference

DCGM (https://developer.nvidia.com/dcgm)

▷ NVIDIA Data Center GPU Manager (DCGM) is a suite of tools for managing and monitoring NVIDIA datacenter GPUs in cluster environments.

▷ It includes active health monitoring, comprehensive diagnostics, system alerts and governance policies including power and clock management.

▷ DCGM also integrates into the Kubernetes ecosystem using DCGM-Exporter to provide rich GPU telemetry in containerized environments.

2. Environments

- NVIDIA DCGM-exporter 2.0.13-2.1.2 (in GPU Operator v1.4.0)

- Node-exporter v1.0.1

- kube-state-metrics v1.9.7

- Prometheus operator stack 0.44.0

- Kubernetes 1.16.15

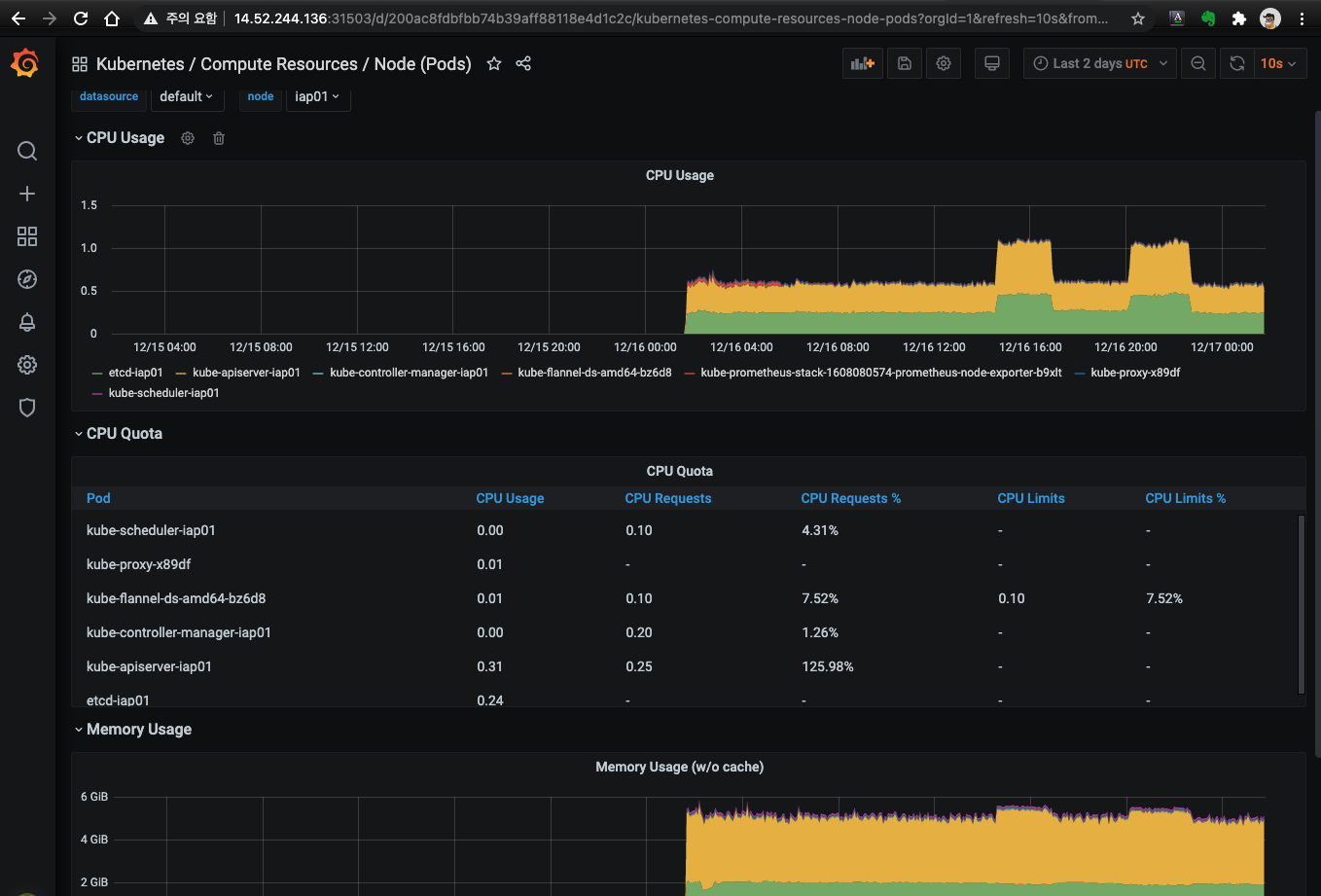

3. Using dashboard (Grafana)

- URL: http://14.52.244.136:30503

$ k get svc kube-prometheus-stack-1608276926-grafana -n gpu-monitor

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kube-prometheus-stack-1608276926-grafana NodePort 10.102.36.19 <none> 80:31503/TCP 5m57s
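The externally reachable port is the number after the colon in the PORT(S) column, so it is worth comparing it with the port used in the dashboard URL. A minimal sketch, assuming the `k` alias used in this post stands for `kubectl`; the function names `nodeport_from_ports` and `service_nodeport` are hypothetical helpers, not part of any tool:

```shell
# Hypothetical helper: pull the node port out of a PORT(S) value like "80:31503/TCP".
# Assumes the "<port>:<nodePort>/<protocol>" form shown for NodePort services.
nodeport_from_ports() {
  ports="${1#*:}"                # strip the service port and colon -> "31503/TCP"
  printf '%s\n' "${ports%%/*}"   # strip the protocol suffix        -> "31503"
}

# Hypothetical helper: ask the API server for the node port directly.
# $1 = service name, $2 = namespace
service_nodeport() {
  kubectl get svc "$1" -n "$2" -o jsonpath='{.spec.ports[0].nodePort}'
}
```

For example, `nodeport_from_ports "80:31503/TCP"` prints `31503`, and `service_nodeport kube-prometheus-stack-1608276926-grafana gpu-monitor` reads the same value from a live cluster.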

- Username / Password

✓ Username: admin

✓ Password: prom-operator

$ helm inspect values prometheus-community/kube-prometheus-stack > kube-prometheus-stack.values

$ grep adminPassword kube-prometheus-stack.values

adminPassword: prom-operator
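The grep above shows the chart default. If the value was overridden at install time, the password actually in use can be read from the cluster instead. A hedged sketch, assuming the Grafana subchart stores it in a Secret with the same name as the Grafana service under the key `admin-password`; `grafana_admin_password` is a hypothetical helper name:

```shell
# Hypothetical helper: print the Grafana admin password stored in the cluster.
# Assumption: the Secret is named like the Grafana service and holds the
# password under the key "admin-password" (base64-encoded, like all Secret data).
# $1 = secret name, $2 = namespace
grafana_admin_password() {
  kubectl get secret "$1" -n "$2" -o jsonpath='{.data.admin-password}' | base64 -d
}
```

Usage, with the release from this post: `grafana_admin_password kube-prometheus-stack-1608276926-grafana gpu-monitor`.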

- NVIDIA DCGM Exporter Dashboard

- Node exporter

- kube-state-metrics

4. Configure GPU monitor

# Deploy a single kube-prometheus-stack and have it accommodate the software to be provided (metric collection, Grafana dashboards)

# Reference: "Prometheus: kube-prometheus-stack"

https://developer.nvidia.com/blog/monitoring-gpus-in-kubernetes-with-dcgm/

https://docs.nvidia.com/datacenter/cloud-native/kubernetes/dcgme2e.html#gpu-telemetry

a. Setting up a GPU monitoring solution

$ helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

$ helm repo update

$ helm inspect values prometheus-community/kube-prometheus-stack > kube-prometheus-stack.values

https://docs.nvidia.com/datacenter/cloud-native/gpu-operator/getting-started.html#setting-up-prometheus

$ vi kube-prometheus-stack.values

…

prometheus:
  …
    type: NodePort # changed this line
  prometheusSpec:
    …
    ## If true, a nil or {} value for prometheus.prometheusSpec.serviceMonitorSelector will cause the
    ## prometheus resource to be created with selectors based on values in the helm deployment,
    ## which will also match the servicemonitors created
    ##
    serviceMonitorSelectorNilUsesHelmValues: false # changed this line
    …
    ## AdditionalScrapeConfigs allows specifying additional Prometheus scrape configurations. Scrape configurations
    ## are appended to the configurations generated by the Prometheus Operator. Job configurations must have the form
    ## as specified in the official Prometheus documentation:
    # additionalScrapeConfigs: []   # default value, replaced by the block below
    additionalScrapeConfigs: # append below lines
    - job_name: gpu-metrics
      scrape_interval: 10s
      metrics_path: /metrics
      scheme: http
      kubernetes_sd_configs:
      - role: endpoints
        namespaces:
          names:
          - gpu-operator-resources
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_node_name]
        action: replace
        target_label: kubernetes_node

…

$ helm install prometheus-community/kube-prometheus-stack --create-namespace --namespace gpu-monitor --generate-name \
    --values kube-prometheus-stack.values

NAME: kube-prometheus-stack-1608276926

LAST DEPLOYED: Fri Dec 18 16:35:30 2020

...

$

$ helm list -A | egrep "NAME|kube-prometheus-stack"

NAME NAMESPACE REVISION UPDATED STATUS CHART APP VERSION

kube-prometheus-stack-1608276926 gpu-monitor 1 2020-12-18 16:35:30.153397249 +0900 KST deployed kube-prometheus-stack-12.8.0 0.44.0

- Troubleshooting #1

▷ Problem

$ helm install prometheus-community/kube-prometheus-stack --create-namespace --namespace gpu-monitoring --generate-name --set prometheus.service.type=NodePort --set prometheus.prometheusSpec.serviceMonitorSelectorNilUsesHelmValues=false

Error: unable to build kubernetes objects from release manifest: error validating "": error validating data: [ValidationError(Prometheus.spec): unknown field "probeNamespaceSelector" in com.coreos.monitoring.v1.Prometheus.spec, ValidationError(Prometheus.spec): unknown field "probeSelector" in com.coreos.monitoring.v1.Prometheus.spec]

▷ Cause

$ k get crd | grep prometheuses

prometheuses.monitoring.coreos.com 2020-09-01T06:42:02Z

$ k get crd prometheuses.monitoring.coreos.com -o yaml | egrep "probeNamespaceSelector:|probeSelector:"

▷ Solution

$ kubectl apply -f https://raw.githubusercontent.com/prometheus-operator/prometheus-operator/v0.42.0/example/prometheus-operator-crd/monitoring.coreos.com_prometheuses.yaml

$ k get crd prometheuses.monitoring.coreos.com -o yaml | egrep "probeNamespaceSelector:|probeSelector:"

probeNamespaceSelector:

probeSelector:
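The before/after check on the CRD can be wrapped in a small function so it is easy to run again before installing the chart. This is only a sketch: `crd_has_probe_fields` is a hypothetical helper name, and it tests the same two field names as the egrep above.

```shell
# Hypothetical helper: succeed only if the installed Prometheus CRD already
# declares both fields that the chart's Prometheus resource uses.
crd_has_probe_fields() {
  # Fetch the CRD once; return 2 if it cannot be read at all.
  crd_yaml=$(kubectl get crd prometheuses.monitoring.coreos.com -o yaml) || return 2
  printf '%s\n' "$crd_yaml" | grep -q 'probeNamespaceSelector:' &&
    printf '%s\n' "$crd_yaml" | grep -q 'probeSelector:'
}
```

A possible use is `crd_has_probe_fields || echo "CRD is outdated; apply the newer CRD first"`.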

- Troubleshooting #2

▷ Problem: A conflict with the Prometheus Operator used by Strimzi causes the Prometheus pod to be terminated repeatedly

▷ Cause

- Prometheus operator

$ k get pod -A | grep prometheus | grep operator

gpu-monitoring kube-prometheus-stack-1608-operator-6695db5768-wqc87 1/1 Running 0 14h

monitoring prometheus-operator-d85689897-t8zdg 1/1 Running 0 7d23h

$

- GPU

# helm install prometheus-community/kube-prometheus-stack …

# prometheus operator 0.44

# Prometheus v2.22.1

$ k get pod prometheus-kube-prometheus-stack-1608-prometheus-0 -n gpu-monitoring -w

NAME READY STATUS RESTARTS AGE

prometheus-kube-prometheus-stack-1608-prometheus-0 0/2 Terminating 0 19s

prometheus-kube-prometheus-stack-1608-prometheus-0 0/2 Pending 0 0s

prometheus-kube-prometheus-stack-1608-prometheus-0 0/2 Terminating 0 5s

prometheus-kube-prometheus-stack-1608-prometheus-0 0/2 Pending 0 0s

prometheus-kube-prometheus-stack-1608-prometheus-0 0/2 Terminating 0 9s

…

- Strimzi

# prometheus operator 0.38.1

# Prometheus 2.16

# Version >=0.18.0 of the Prometheus Operator requires a Kubernetes cluster of version >=1.8.0

# Version >=0.39.0 of the Prometheus Operator requires a Kubernetes cluster of version >=1.16.0

$ k get pod prometheus-prometheus-0 -n monitoring -w

NAME READY STATUS RESTARTS AGE

prometheus-prometheus-0 0/2 Pending 0 1s

prometheus-prometheus-0 0/2 Terminating 0 3s

▷ Solution: $ k delete ns monitoring
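Before removing anything, it can help to confirm how many Prometheus Operator pods are running cluster-wide, since more than one is the situation described here. A minimal sketch using the same grep filter as above; the function names are hypothetical:

```shell
# Hypothetical helper: list Prometheus Operator pods in all namespaces,
# using the same filter as the command shown in the Cause section.
list_prometheus_operators() {
  kubectl get pod -A --no-headers | grep prometheus | grep operator
}

# Hypothetical helper: print how many such pods exist.
count_prometheus_operators() {
  list_prometheus_operators | wc -l | tr -d ' '
}
```

If `count_prometheus_operators` prints a number greater than 1, several operators may be competing over the same Prometheus resources.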

b. Installing dcgm-exporter

- The nvidia-dcgm-exporter pods installed by the GPU Operator are used, so a separate installation is not required

$ k get pod -n gpu-operator-resources | egrep 'NAME|nvidia-dcgm-exporter'

NAME READY STATUS RESTARTS AGE

nvidia-dcgm-exporter-426f4 1/1 Running 0 14m

nvidia-dcgm-exporter-wxkq6 1/1 Running 0 14m

$

$ helm inspect values gpu-helm-charts/dcgm-exporter > dcgm-exporter.yaml

$ helm install gpu-helm-charts/dcgm-exporter --generate-name --namespace gpu-monitor --set-string nodeSelector."nvidia\\.com/gpu\\.present"=true # --dry-run --debug

NAME: dcgm-exporter-1608086181

LAST DEPLOYED: Wed Dec 16 11:36:22 2020

NAMESPACE: gpu-monitor

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

1. Get the application URL by running these commands:

export POD_NAME=$(kubectl get pods -n gpu-monitor -l "app.kubernetes.io/name=dcgm-exporter,app.kubernetes.io/instance=dcgm-exporter-1608086181" -o jsonpath="{.items[0].metadata.name}")

kubectl -n gpu-monitor port-forward $POD_NAME 8080:9400 &

echo "Visit http://127.0.0.1:8080/metrics to use your application"

$
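Once the port-forward from the NOTES above is running, the metrics endpoint can be checked for GPU samples. The sketch below assumes the exporter's default counter set, which includes `DCGM_FI_DEV_GPU_UTIL`; `gpu_util_samples` is a hypothetical helper name.

```shell
# Hypothetical helper: print only the GPU utilization samples from a
# dcgm-exporter metrics endpoint.
# $1 = metrics URL, e.g. http://127.0.0.1:8080/metrics
gpu_util_samples() {
  # The ^ anchor skips the "# HELP" and "# TYPE" comment lines.
  curl -s "$1" | grep '^DCGM_FI_DEV_GPU_UTIL'
}
```

For example, `gpu_util_samples http://127.0.0.1:8080/metrics` should print one line per GPU; empty output suggests the exporter is not reachable or the counter is not enabled.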

c. Checking Prometheus

- URL: http://14.52.244.136:30090/
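Besides the web UI, the Prometheus HTTP API can confirm that the gpu-metrics job is delivering data. A minimal sketch against the `/api/v1/query` endpoint; `prom_instant_query` is a hypothetical helper name, and the metric name in the usage line assumes the default dcgm-exporter counters.

```shell
# Hypothetical helper: run an instant query against the Prometheus HTTP API.
# $1 = Prometheus base URL (a trailing slash is tolerated), $2 = PromQL expression
prom_instant_query() {
  # -G sends the URL-encoded expression as a GET query string.
  curl -s -G --data-urlencode "query=$2" "${1%/}/api/v1/query"
}
```

Usage: `prom_instant_query http://14.52.244.136:30090/ DCGM_FI_DEV_GPU_UTIL` returns a JSON document; a non-empty `result` array indicates that GPU metrics are being scraped.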

d. Setting dashboard

$ k patch svc kube-prometheus-stack-1608276926-grafana -n gpu-monitor -p '{ "spec": { "type": "NodePort" } }'

- To start a Grafana dashboard for GPU metrics, import the reference NVIDIA dashboard from Grafana Dashboards.

e. List dashboard
