1. What is Kubeflow KFServing?

- Kubeflow supports two model serving systems that allow multi-framework model serving: KFServing and Seldon Core.

- KFServing enables serverless inferencing on Kubernetes.

✓ It encapsulates the complexity of autoscaling, networking, health checking, and server configuration to bring cutting-edge serving features such as GPU autoscaling, scale to zero, and canary rollouts to your ML deployments.

- Kubeflow 1.2 includes KFServing v0.4.1.

Knative Serving v0.14.3, Istio v1.3

- Kubeflow 1.3 includes KFServing v0.5.1, which promoted the core InferenceService API from v1alpha2 to the stable v1beta1 version and added a v1alpha1 version of Multi-Model Serving.

Knative Serving v0.17.4, Istio v1.9

- Reference

https://v1-2-branch.kubeflow.org/docs/components/kfserving/kfserving/

https://www.kangwoo.kr/2020/04/11/kubeflow-kfserving-%EA%B0%9C%EC%9A%94/

2. Deploying an Inference service

- Deploy TensorFlow models with out-of-the-box model servers

https://github.com/kubeflow/kfserving/tree/master/docs/samples/v1alpha2/tensorflow (Kubeflow 1.2)

https://github.com/kubeflow/kfserving/tree/master/docs/samples/v1beta1/tensorflow (Kubeflow 1.3)

```
$ vi tensorflow.yaml
apiVersion: "serving.kubeflow.org/v1alpha2"
kind: "InferenceService"
metadata:
  name: "flowers-sample"
  namespace: yoosung-jeon
spec:
  default:
    predictor:
      tensorflow:
        storageUri: "gs://kfserving-samples/models/tensorflow/flowers"
$ k apply -f tensorflow.yaml
...
$
```
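Kubeflow 1.3 (KFServing v0.5.1) moved InferenceService to v1beta1. There, the same service is declared without the 'default:' level, and 'predictor' sits directly under 'spec', as in the v1beta1 sample linked above. The function below is a sketch that writes such a manifest. The function name and output file name are hypothetical. The field layout is my reading of the v1beta1 samples and was not tested in this post.

```shell
# write_flowers_v1beta1 [OUTPUT_FILE]
# Hypothetical helper: writes the flowers-sample InferenceService using the
# v1beta1 API (KFServing v0.5.x). Compared with v1alpha2, the "default:" level
# is gone and the predictor sits directly under spec.
write_flowers_v1beta1() {
  local out="${1:-tensorflow-v1beta1.yaml}"
  cat > "$out" <<'EOF'
apiVersion: "serving.kubeflow.org/v1beta1"
kind: "InferenceService"
metadata:
  name: "flowers-sample"
  namespace: yoosung-jeon
spec:
  predictor:
    tensorflow:
      storageUri: "gs://kfserving-samples/models/tensorflow/flowers"
EOF
  echo "$out"   # print the file name so it can be passed to kubectl
}
```

Usage on a Kubeflow 1.3 cluster: k apply -f "$(write_flowers_v1beta1)"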

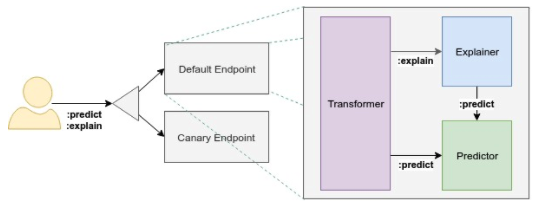

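- The InferenceService data plane

✓ Endpoints: a “Default” endpoint and a “Canary” endpoint

✓ Components: each endpoint consists of several components, such as a “predictor”, an “explainer”, and a “transformer”.

Predictor: the core component of an InferenceService. It consists of the model and the model server that make the model available at a network endpoint.

Explainer: provides an explanation of how the model arrived at its prediction. Users can define their own explainer container. For common use cases, KFServing provides out-of-the-box explainers such as Alibi.

Transformer: defines pre- and post-processing steps around the prediction and explanation workflows. Like the explainer, it is configured through the relevant environment variables. For common use cases, KFServing provides out-of-the-box transformers such as Feast.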

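- Calling the Inference service

Because Dex (OIDC) is configured, a call to the deployed Inference service is redirected to a URL that asks for authentication. Dex authentication therefore has to be bypassed for this endpoint. See the 'Dex 인증 / 우회' (Dex authentication / bypass) document.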

```
$ MODEL_NAME=flowers-sample
$ SERVICE_HOSTNAME=$(kubectl get inferenceservice ${MODEL_NAME} -o jsonpath='{.status.url}' | cut -d "/" -f 3)
$ INGRESS_HOST=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
$ INGRESS_PORT=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.spec.ports[?(@.name=="http2")].port}')
$ INPUT_PATH=@./input.json
$ echo "SERVICE_HOSTNAME=$SERVICE_HOSTNAME"
SERVICE_HOSTNAME=flowers-sample.yoosung-jeon.example.com
$ echo "INGRESS_HOST=$INGRESS_HOST, INGRESS_PORT=$INGRESS_PORT"
INGRESS_HOST=14.52.244.137, INGRESS_PORT=80
$ curl -v -H "Host: ${SERVICE_HOSTNAME}" http://${INGRESS_HOST}:${INGRESS_PORT}/v1/models/$MODEL_NAME:predict -d $INPUT_PATH
* About to connect() to 14.52.244.137 port 80 (#0)
* Trying 14.52.244.137...
* Connected to 14.52.244.137 (14.52.244.137) port 80 (#0)
> POST /v1/models/flowers-sample:predict HTTP/1.1
> User-Agent: curl/7.29.0
> Accept: */*
> Host: flowers-sample.yoosung-jeon.example.com
> Content-Length: 16201
> Content-Type: application/x-www-form-urlencoded
> Expect: 100-continue
>
< HTTP/1.1 100 Continue
< HTTP/1.1 200 OK
< content-length: 222
< content-type: application/json
< date: Wed, 21 Jul 2021 06:03:18 GMT
< x-envoy-upstream-service-time: 2049
< server: istio-envoy
<
{
    "predictions": [
        {
            "scores": [0.999114931, 9.20987877e-05, 0.000136786475, 0.000337258185, 0.000300532876, 1.84813962e-05],
            "prediction": 0,
            "key": " 1"
        }
    ]
* Connection #0 to host 14.52.244.137 left intact
}
$
```
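The call above can be wrapped in a few shell functions so it can be repeated for other models. This is a sketch, and all function names are hypothetical. The Content-Type header is my own addition: with -d, curl otherwise sends application/x-www-form-urlencoded, as the trace shows. argmax_scores only illustrates that, for this sample, the 'prediction' field matches the index of the highest score.

```shell
# host_from_url URL -> host[:port] part of a URL, like `cut -d "/" -f 3`
host_from_url() {
  local rest="${1#*://}"        # drop the scheme, e.g. "http://"
  echo "${rest%%/*}"            # drop everything from the first "/"
}

# predict_url HOST PORT MODEL -> KFServing v1 protocol predict endpoint
predict_url() {
  echo "http://$1:$2/v1/models/$3:predict"
}

# argmax_scores "[s0, s1, ...]" -> zero-based index of the largest score
argmax_scores() {
  echo "$1" | sed 's/[][ ]//g' | tr ',' '\n' |
    awk 'NR == 1 || $1 + 0 > best { best = $1 + 0; idx = NR - 1 } END { print idx }'
}

# call_predict MODEL INPUT_JSON_FILE
# Needs cluster access; repeats the kubectl lookups from the session above.
call_predict() {
  local model="$1" input="$2" host ingress_host ingress_port
  host=$(host_from_url "$(kubectl get inferenceservice "$model" -o jsonpath='{.status.url}')")
  ingress_host=$(kubectl -n istio-system get service istio-ingressgateway \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}')
  ingress_port=$(kubectl -n istio-system get service istio-ingressgateway \
    -o jsonpath='{.spec.ports[?(@.name=="http2")].port}')
  curl -s -H "Host: ${host}" -H "Content-Type: application/json" \
    -d @"$input" "$(predict_url "$ingress_host" "$ingress_port" "$model")"
}
```

Usage: call_predict flowers-sample ./input.json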

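3. Changing the custom domain

- When an Inference service is deployed, the URL used to call it has the form '{Inference service name}.{namespace}.example.com'.

- To change the 'example.com' domain, see the 'Knative - Custom domain 변경' (Knative - changing the custom domain) document. In the output below, the domain has been changed to 'kf-serv.acp.kt.co.kr'.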

```
$ k get inferenceservices.serving.kubeflow.org -n yoosung-jeon
NAME             URL                                                       READY   DEFAULT TRAFFIC   CANARY TRAFFIC   AGE
bert-large       http://bert-large.yoosung-jeon.kf-serv.acp.kt.co.kr       True    100                                5d16h
flowers-sample   http://flowers-sample.yoosung-jeon.kf-serv.acp.kt.co.kr   True    100                                27m
$
```
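The URL format is simply '{name}.{namespace}.{domain}', which the first function below reproduces. The other two functions sketch how Knative's default domain is commonly inspected and changed through the config-domain ConfigMap in the knative-serving namespace. In that ConfigMap, a domain key with an empty value acts as the default. Treat the patch as an assumption, and follow the Knative document referenced above for your version. For example, an existing 'example.com' key may also need to be removed. All function names are hypothetical.

```shell
# isvc_url NAME NAMESPACE DOMAIN -> URL an InferenceService is exposed at
isvc_url() {
  echo "http://$1.$2.$3"
}

# show_knative_domain: print the current Knative domain configuration
show_knative_domain() {
  kubectl get configmap config-domain -n knative-serving -o yaml
}

# set_knative_domain DOMAIN
# Assumption: merge-patch DOMAIN in as a key with an empty selector value,
# which Knative treats as the default domain for all services.
set_knative_domain() {
  kubectl patch configmap config-domain -n knative-serving --type merge \
    -p "{\"data\":{\"$1\":\"\"}}"
}
```

Usage for this cluster would be set_knative_domain kf-serv.acp.kt.co.kr, after which new URLs look like the ones listed above.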

4. Specifying the TensorFlow ModelServer version

- By default, TensorFlow ModelServer version 1.14.0 is used.

```
$ k describe cm inferenceservice-config -n kubeflow | grep tensorflow -A 3
        "tensorflow": {
            "image": "tensorflow/serving",
            "defaultImageVersion": "1.14.0",
            "defaultGpuImageVersion": "1.14.0-gpu"
        },
$ k exec flowers-sample-predictor-default-5jsk4-deployment-78cc8b8bfzkfc -c kfserving-container -n yoosung-jeon -it -- tensorflow_model_server --version
TensorFlow ModelServer: 1.14.0-rc0
TensorFlow Library: 1.14.0
$
```

- Set runtimeVersion to the TensorFlow ModelServer version you want to use.

```
$ vi tensorflow.yaml
apiVersion: "serving.kubeflow.org/v1alpha2"
kind: "InferenceService"
metadata:
  name: "flowers-sample"
  namespace: yoosung-jeon
spec:
  default:
    predictor:
      tensorflow:
        runtimeVersion: "2.5.1"
        storageUri: "gs://kfserving-samples/models/tensorflow/flowers"
$ k apply -f tensorflow.yaml
...
$ k get pod -n yoosung-jeon -l serving.kubeflow.org/inferenceservice=flowers-sample
NAME                                                              READY   STATUS    RESTARTS   AGE
flowers-sample-predictor-default-zwwvl-deployment-f668b4498294f   2/2     Running   3          2d11h
$ k exec flowers-sample-predictor-default-zwwvl-deployment-f668b4498294f -c kfserving-container -n yoosung-jeon -it -- tensorflow_model_server --version
TensorFlow ModelServer: 2.5.1-rc3
TensorFlow Library: 2.5.0
$
```
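As far as I can tell from the KFServing v1alpha2 code, the predictor image is built as '{image}:{runtimeVersion}' from the 'image' value in inferenceservice-config. When runtimeVersion is omitted, defaultImageVersion is used, or defaultGpuImageVersion if a GPU is requested. The sketch below encodes that assumption with the default values shown above. It also looks up the predictor pod by label instead of hard-coding its generated name. The function names are hypothetical.

```shell
# Values taken from the inferenceservice-config output above.
TF_IMAGE="tensorflow/serving"
TF_DEFAULT_VERSION="1.14.0"
TF_DEFAULT_GPU_VERSION="1.14.0-gpu"

# effective_runtime RUNTIME_VERSION GPU_COUNT -> version that would be used
# (assumption: empty runtimeVersion falls back to the configured defaults)
effective_runtime() {
  if [ -n "$1" ]; then
    echo "$1"
  elif [ "${2:-0}" -gt 0 ]; then
    echo "$TF_DEFAULT_GPU_VERSION"
  else
    echo "$TF_DEFAULT_VERSION"
  fi
}

# tf_serving_image RUNTIME_VERSION -> full image reference (assumed format)
tf_serving_image() {
  echo "${TF_IMAGE}:$1"
}

# show_model_server_version ISVC NAMESPACE (needs cluster access)
show_model_server_version() {
  local pod
  pod=$(kubectl get pod -n "$2" -l "serving.kubeflow.org/inferenceservice=$1" \
    -o jsonpath='{.items[0].metadata.name}')
  kubectl exec "$pod" -n "$2" -c kfserving-container -- tensorflow_model_server --version
}
```

Usage: show_model_server_version flowers-sample yoosung-jeon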

5. Using a GPU

- Specify a GPU build of TensorFlow ModelServer and the number of GPUs to allocate:

✓ runtimeVersion: "2.5.1-gpu"

✓ nvidia.com/gpu: 1

```
$ vi tensorflow.yaml
...
spec:
  default:
    predictor:
      tensorflow:
        runtimeVersion: "2.5.1-gpu"
        resources:
          limits:
            cpu: "1"
            memory: 16Gi
            nvidia.com/gpu: 1
          requests:
            cpu: "1"
            memory: 16Gi
            nvidia.com/gpu: 1
...
$ k apply -f tensorflow.yaml
...
$ k logs flowers-sample-predictor-default-cxqnw-deployment-5d94b997s4jfr -n yoosung-jeon -c kfserving-container
...
2021-08-13 02:26:35.968290: I external/org_tensorflow/tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcudart.so.11.0
...
$
```
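As far as I know, KFServing's v1alpha2 validation rejects a TensorFlow predictor whose GPU request and runtimeVersion disagree. That covers GPUs requested with a non '-gpu' runtime, and a '-gpu' runtime with no GPU requested. The hypothetical helper below mirrors that rule as a local check to run before 'k apply'. It is not part of KFServing.

```shell
# check_tf_runtime RUNTIME_VERSION [GPU_COUNT]
# Hypothetical pre-flight check: a GPU request should be paired with a
# "-gpu" runtimeVersion, and a "-gpu" runtimeVersion with a GPU request.
check_tf_runtime() {
  local runtime="$1" gpus="${2:-0}" gpu_image=no
  case "$runtime" in
    *-gpu*) gpu_image=yes ;;
  esac
  if [ "$gpus" -gt 0 ] && [ "$gpu_image" = no ]; then
    echo "error: ${gpus} GPU(s) requested, but runtimeVersion '${runtime}' is not a -gpu image" >&2
    return 1
  fi
  if [ "$gpus" -eq 0 ] && [ "$gpu_image" = yes ]; then
    echo "error: runtimeVersion '${runtime}' is a -gpu image, but no GPU is requested" >&2
    return 1
  fi
  echo "ok"
}
```

For the manifest above, check_tf_runtime 2.5.1-gpu 1 prints ok.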

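- The deployed Inference service has been allocated one GPU and holds it. In the output below, tensorflow_model_server occupies 31457MiB of GPU 0's memory, while GPU 1 is idle.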

```
$ k exec nvidia-driver-daemonset-txb8j -n gpu-operator-resources -it -- nvidia-smi
Fri Aug 13 02:59:44 2021
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 450.80.02    Driver Version: 450.80.02    CUDA Version: 11.0     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  Tesla V100-PCIE...  On   | 00000000:3B:00.0 Off |                    0 |
| N/A   28C    P0    38W / 250W |  31461MiB / 32510MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+
|   1  Tesla V100-PCIE...  On   | 00000000:D8:00.0 Off |                    0 |
| N/A   25C    P0    24W / 250W |      0MiB / 32510MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0   N/A  N/A    160113      C   ...n/tensorflow_model_server    31457MiB |
+-----------------------------------------------------------------------------+
$
```

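6. Storing models on a PVC

- Creating a PVC and deploying the model

✓ Create the PVC that will hold the model. In this example, the storage class is backed by nfs-client-provisioner, so the directory of the created PV can be accessed directly.

The NAS mount point is '/nfs_01', and the directory of the PV bound to kfserving-pvc is 'yoosung-jeon-kfserving-pvc-pvc-a83bf69f-3b8f-41be-ba51-e76402a36f6d'.

Reference: 'NFS-Client Provisioner' document

✓ Copy the TensorFlow flowers model from Google Cloud Storage with the gsutil command.

✓ The model directory must follow the 'model_name/{version number}/*' layout.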

```
$ vi kfserving-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: kfserving-pvc
  namespace: yoosung-jeon
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
  storageClassName: nfs-sc-iap
$ k apply -f kfserving-pvc.yaml
...
$ cd /nfs_01/yoosung-jeon-kfserving-pvc-pvc-a83bf69f-3b8f-41be-ba51-e76402a36f6d
$ gsutil cp -r gs://kfserving-samples/models/tensorflow/flowers .
Copying gs://kfserving-samples/models/tensorflow/flowers/0001/saved_model.pb...
Copying gs://kfserving-samples/models/tensorflow/flowers/0001/variables/variables.data-00000-of-00001...
Copying gs://kfserving-samples/models/tensorflow/flowers/0001/variables/variables.index...
\ [3 files][109.5 MiB/109.5 MiB]    2.8 MiB/s
Operation completed over 3 objects/109.5 MiB.
$ tree flowers
flowers
└── 0001
    ├── saved_model.pb
    └── variables
        ├── variables.data-00000-of-00001
        └── variables.index

2 directories, 3 files
$
```
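The directory used in the 'cd' above follows nfs-client-provisioner's naming scheme. To my knowledge, the provisioner names each PV directory '{namespace}-{PVC name}-{PV name}' under the NFS export. The sketch below rebuilds that path, and pv_name_of asks Kubernetes for the PV bound to the claim. Both function names are hypothetical, and the mount point /nfs_01 is specific to this environment.

```shell
# nfs_pv_dir MOUNT_POINT NAMESPACE PVC_NAME PV_NAME
# Assumes nfs-client-provisioner's "<namespace>-<pvc name>-<pv name>" scheme.
nfs_pv_dir() {
  printf '%s/%s-%s-%s\n' "${1%/}" "$2" "$3" "$4"   # strip a trailing "/" from the mount point
}

# pv_name_of NAMESPACE PVC_NAME -> name of the PV bound to the claim (needs cluster access)
pv_name_of() {
  kubectl get pvc "$2" -n "$1" -o jsonpath='{.spec.volumeName}'
}

# Example, on a host with the NAS mounted at /nfs_01:
#   cd "$(nfs_pv_dir /nfs_01 yoosung-jeon kfserving-pvc "$(pv_name_of yoosung-jeon kfserving-pvc)")"
```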

- Set storageUri in the form 'pvc://{PVC name}/{model}'.

If the model has several numbered version directories, the one with the highest number is used.
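TensorFlow Serving treats each numeric subdirectory as a model version and, under its default version policy, serves only the highest one. The sketch below shows which directory that would be. It compares names numerically, so '10' beats '9'. It also checks that a version directory contains saved_model.pb. The function names are hypothetical helpers, not KFServing tools.

```shell
# latest_version MODEL_DIR -> highest-numbered version subdirectory
latest_version() {
  local dir="$1" d name n best="" best_n=-1
  for d in "$dir"/*/; do
    [ -d "$d" ] || continue                      # glob matched nothing
    name=$(basename "$d")
    case "$name" in ''|*[!0-9]*) continue ;; esac  # numeric names only
    n=$(echo "$name" | sed 's/^0*//')            # "0001" -> "1"
    n=${n:-0}
    if [ "$n" -gt "$best_n" ]; then
      best_n=$n
      best=$name
    fi
  done
  if [ -z "$best" ]; then
    echo "no numeric version directory under $dir" >&2
    return 1
  fi
  echo "$best"
}

# has_saved_model MODEL_DIR VERSION -> success if the SavedModel file exists
has_saved_model() {
  [ -f "$1/$2/saved_model.pb" ]
}
```

For the tree above, latest_version flowers prints 0001.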

```
$ vi tensorflow.yaml
apiVersion: "serving.kubeflow.org/v1alpha2"
kind: "InferenceService"
metadata:
  name: "flowers-sample"
  namespace: yoosung-jeon
spec:
  default:
    predictor:
      tensorflow:
        storageUri: "pvc://kfserving-pvc/flowers"
        runtimeVersion: "2.5.1"
$ k apply -f tensorflow.yaml
...
$ k logs flowers-sample-predictor-default-7wjb9-deployment-848cbb7btn6gn -n yoosung-jeon -c storage-initializer
[I 210813 07:25:05 initializer-entrypoint:13] Initializing, args: src_uri [/mnt/pvc/flowers] dest_path[ [/mnt/models]
[I 210813 07:25:05 storage:35] Copying contents of /mnt/pvc/flowers to local
[I 210813 07:25:05 storage:205] Linking: /mnt/pvc/flowers/0001 to /mnt/models/0001
$
```
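Judging from the storage-initializer log above, a 'pvc://{PVC name}/{path}' URI ends up with the claim mounted under /mnt/pvc. The model is then read from /mnt/pvc/{path}, and its version directories are linked into /mnt/models. The helper below only parses a storageUri into those two parts to make the mapping explicit. The /mnt/pvc prefix is taken from this log, not from KFServing documentation, and the function name is hypothetical.

```shell
# parse_pvc_uri URI -> "<pvc name> <path as seen by the storage initializer>"
# Assumption: the claim is mounted at /mnt/pvc, as in the log above.
parse_pvc_uri() {
  local uri="$1" rest pvc path
  case "$uri" in
    pvc://*) ;;
    *) echo "not a pvc:// URI: $uri" >&2; return 1 ;;
  esac
  rest=${uri#pvc://}
  pvc=${rest%%/*}                         # first segment is the claim name
  case "$rest" in
    */*) path=${rest#*/} ;;               # remainder is the path inside the volume
    *) path="" ;;
  esac
  echo "$pvc /mnt/pvc/$path"
}
```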

7. Autoscaling

- KFServing supports autoscaling through Knative. For details on autoscaling, see the 'Knative - Autoscaling #1 (개념)' (concepts) document.

```
$ vi tensorflow-autoscaler.yaml
apiVersion: "serving.kubeflow.org/v1alpha2"
kind: "InferenceService"
metadata:
  name: "flowers-sample"
  namespace: yoosung-jeon
  annotations:
    autoscaling.knative.dev/target: "3"
    autoscaling.knative.dev/minScale: "1"
    autoscaling.knative.dev/maxScale: "10"
spec:
  default:
    predictor:
      tensorflow:
        storageUri: "pvc://kfserving-pvc/flowers"
        runtimeVersion: "2.5.1"
$ k apply -f tensorflow-autoscaler.yaml
...
$ k get pod -l app=flowers-sample-predictor-default-zwwvl -n yoosung-jeon
NAME                                                              READY   STATUS    RESTARTS   AGE
flowers-sample-predictor-default-zwwvl-deployment-8cc7d458pkfvx   2/2     Running   0          3h21m
$
```

- Generating load with the hey tool (5 concurrent sessions for 30 seconds) shows autoscaling in action: the predictor scales from one pod to three.

```
$ ./hey_linux_amd64 -z 30s -c 5 -m POST -D ./input.json http://flowers-sample.yoosung-jeon.kf-serv.acp.kt.co.kr:80/v1/models/flowers-sample:predict

Summary:
  Total:        33.4836 secs
  Slowest:      16.0879 secs
  Fastest:      1.4547 secs
  Average:      5.1455 secs
  Requests/sec: 0.9258

  Total data:   6851 bytes
  Size/request: 221 bytes

Response time histogram:
  1.455 [1]     |■■■■
  2.918 [6]     |■■■■■■■■■■■■■■■■■■■■■■
  4.381 [7]     |■■■■■■■■■■■■■■■■■■■■■■■■■
  5.845 [11]    |■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■■
  7.308 [1]     |■■■■
  8.771 [1]     |■■■■
  10.235 [2]    |■■■■■■■
  11.698 [0]    |
  13.161 [1]    |■■■■
  14.625 [0]    |
  16.088 [1]    |■■■■

Latency distribution:
  10% in 2.5903 secs
  25% in 3.0997 secs
  50% in 4.8862 secs
  75% in 5.5104 secs
  90% in 9.8968 secs
  95% in 16.0879 secs
  99% in 0.0000 secs

Details (average, fastest, slowest):
  DNS+dialup:   0.0115 secs, 1.4547 secs, 16.0879 secs
  DNS-lookup:   0.0113 secs, 0.0000 secs, 0.0700 secs
  req write:    0.0002 secs, 0.0001 secs, 0.0004 secs
  resp wait:    5.1336 secs, 1.4541 secs, 16.0160 secs
  resp read:    0.0002 secs, 0.0000 secs, 0.0005 secs

Status code distribution:
  [200] 31 responses

$ k get pod -l app=flowers-sample-predictor-default-zwwvl -n yoosung-jeon
NAME                                                              READY   STATUS    RESTARTS   AGE
flowers-sample-predictor-default-zwwvl-deployment-8cc7d4586ggxv   2/2     Running   0          20s
flowers-sample-predictor-default-zwwvl-deployment-8cc7d4589c96m   2/2     Running   0          24s
flowers-sample-predictor-default-zwwvl-deployment-8cc7d458pkfvx   2/2     Running   0          3h21m
$
```
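Here is a rough way to see why three pods appeared. Knative's autoscaler aims for about ceil(observed concurrency / (target * target percentage)) pods, clamped to the min/max scale. The sketch below assumes Knative's default container-concurrency-target-percentage of 70. It ignores panic mode and the averaging windows, so it is a back-of-the-envelope estimate rather than Knative's actual algorithm. With target "3" and hey keeping about 5 requests in flight, it gives ceil(5 / 2.1) = 3. The function name is hypothetical.

```shell
# desired_pods CONCURRENCY TARGET [TARGET_PCT] [MIN] [MAX]
# Stable-mode estimate: ceil(concurrency / (target * pct / 100)), clamped to
# [min, max]. TARGET_PCT defaults to 70 (assumed Knative default).
desired_pods() {
  local conc="$1" target="$2" pct="${3:-70}" min="${4:-1}" max="${5:-10}"
  local num den pods
  num=$((conc * 100))
  den=$((target * pct))
  pods=$(( (num + den - 1) / den ))      # integer ceiling division
  if [ "$pods" -lt "$min" ]; then pods=$min; fi
  if [ "$pods" -gt "$max" ]; then pods=$max; fi
  echo "$pods"
}
```

For example, desired_pods 5 3 matches the three pods listed above, while desired_pods 0 3 stays at the minScale of 1.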
